First Steps Toward Building an Autonomous Agentic AI for Cognitive Behavioral Therapy (CBT) in Psychological Counseling

Part I: Core Concepts and Conceptual Framework

Abstract

This initial part of the article lays the theoretical and architectural groundwork for a novel approach to digital mental health: an autonomous multi-agent system for delivering Cognitive Behavioral Therapy (CBT). We first deconstruct the core tenets of CBT into computationally tractable components, including the cognitive model, Socratic questioning, and behavioral activation. Subsequently, we introduce the paradigm of Agentic AI and argue for its suitability in modeling the dynamic, multifaceted nature of the therapeutic process. We then propose a conceptual framework, defining the roles and interactions of specialized agents—such as the Cognitive Analyzer Agent, the Socratic Dialogue Agent, and the Behavioral Strategist Agent—that collaboratively manage the therapeutic workflow. This framework serves as the blueprint for the subsequent technical design and implementation discussed in later parts of the article.

1. Introduction: The Confluence of AI and Mental Health

1.1 The Growing Need for Accessible Mental Healthcare

The global community is currently grappling with a profound mental health crisis of unprecedented scale. An estimated 1 billion individuals worldwide live with a mental disorder, making these conditions among the leading causes of ill health and disability globally.1 This burden is significant, affecting approximately 16% of the world's population, or one in six individuals, and can lead to severe consequences, including premature mortality, with some persons with disabilities dying up to 20 years earlier than those without disabilities.2

Despite this widespread prevalence, access to quality mental health services remains severely limited across the globe. A critical accessibility gap exists, particularly in low- and middle-income countries, where over 75% of individuals with mental health disorders receive no treatment for their conditions.1 This disparity in access is not merely a matter of resource scarcity but is compounded by a complex interplay of factors. These include the pervasive stigma and discrimination associated with mental illnesses, insufficient funding for mental health services, inadequate insurance coverage, and the high cost of care, all of which contribute to delays in diagnosis and treatment.1 The sheer magnitude of untreated mental health conditions points to a fundamental and persistent failure of traditional healthcare models to meet global demand. This situation is not simply a resource allocation challenge but represents a systemic incapacity of existing structures to scale to the required magnitude, thereby creating a significant void that innovative technological solutions are uniquely positioned to address.

The impact of the COVID-19 pandemic has further exacerbated this crisis, significantly worsening the global mental health landscape and intensifying the demand for already strained services.1 The pandemic introduced unprecedented hazards to mental health globally, leading to reported high rates of anxiety symptoms (ranging from 6.33% to 50.9%) and depression symptoms (from 14.6% to 48.3%) in the general population.3 Concerns about mental health remain elevated, with a striking 90% of U.S. adults believing the country is facing a mental health crisis.4 Certain vulnerable populations have been disproportionately affected, including individuals experiencing household job loss, young adults aged 18-24, women, and adolescent females, who have shown increased rates of anxiety, depression, and suicidal ideation.4 This period demonstrated that the pandemic did not solely create new mental health problems; rather, it functioned as a significant accelerant, exposing and amplifying pre-existing vulnerabilities and systemic weaknesses within mental healthcare infrastructures. The crisis acted as a critical stress test, pushing an already strained system beyond its breaking point and underscoring the urgent need for resilient, scalable alternatives, such as AI-driven solutions, that are not constrained by physical presence or traditional operational hours. Furthermore, the barriers to care extend beyond mere availability to deeply rooted social and economic factors like stigma, discrimination, and cost. This implies that any technological solution must be not only effective but also culturally sensitive, affordable, and designed to inherently mitigate these non-clinical barriers. For instance, an AI solution, by potentially offering greater anonymity and lower cost, could intrinsically reduce some of these obstacles, though careful design is required to ensure algorithmic fairness and avoid perpetuating existing biases against marginalized communities.5

1.2 The Promise of AI in Psychological Counseling

Artificial intelligence (AI) is increasingly being applied in mental health services, presenting novel solutions such as chatbots and diagnostic tools. Advanced AI techniques, including machine learning, deep learning, and semantic analysis of patient statements, enable the early diagnosis of various conditions, including psychotic disorders, ADHD, and anorexia nervosa. Digital psychotherapists and AI chatbots have demonstrated efficacy in reducing anxiety symptoms by delivering therapeutic interventions, offering accessible, cost-effective, and immediate support. These systems can personalize interactions by adapting responses to individual user needs and are available 24/7, overcoming geographical and temporal constraints inherent in traditional care models.7

Despite these advantages, current AI applications in psychological counseling face significant limitations. A prominent concern is their inherent inability to replicate the nuanced empathy and genuine human connection that are crucial for establishing a strong therapeutic alliance, a cornerstone of successful psychotherapy.8 Users frequently perceive AI interactions as "cold words generated by an AI" and express doubts regarding the AI's capacity to truly comprehend complex emotional states.9 This recurring theme of an "empathy gap" is not merely a minor limitation but a fundamental design challenge. It suggests that future AI systems must either computationally approximate these human qualities or be explicitly designed to complement, rather than replace, human interaction, particularly for the intricate emotional work involved in therapy.

Further limitations include challenges in building trust, stemming from potential misinterpretations of user inputs, the risk of offering unhelpful advice, and pervasive fears regarding data misuse or leakage.8 The nascent and often fragmented regulatory landscape for AI in psychotherapy exacerbates these privacy and safety concerns, with a notable absence of comprehensive global regulations.6 Technically, current chatbots often struggle with understanding nuanced language, such as idioms or sarcasm, and accurately interpreting subtle emotional cues beyond their programmed responses.8 This restricts their ability to grasp the full spectrum of emotional complexities and respond appropriately within a therapeutic context. Moreover, most existing AI chatbots are designed for mild to moderate symptoms and may prove unsuitable for severe mental health conditions or complex comorbid disorders, which frequently necessitate comprehensive human-led assessments.8 Research also indicates potential safety risks, with some chatbots demonstrating tendencies to enable dangerous behaviors or reinforce existing stigmas.5 The identified limitations, particularly in handling complex, nuanced, and sensitive aspects of mental health, indicate that a mere "tool" approach is insufficient. A more integrated, systematic framework is required to address the multifaceted nature of therapy effectively.

Ethical considerations, such as algorithmic bias, transparency, and accountability, present substantial challenges. AI algorithms can inadvertently perpetuate biases present in their training data, potentially leading to inequitable outcomes and discrimination against certain groups.5 The lack of transparency and explainability in AI decision-making processes is a pressing ethical concern, making it difficult to understand why a particular response or recommendation was generated.10 These ethical considerations are not merely post-deployment add-ons but must be foundational to the architectural design of any autonomous mental health AI. Failure to integrate principles like "privacy-by-design," "fairness audits," and "accountability hierarchies" from the outset can lead to significant harm to users, substantial regulatory fines, and a profound loss of public trust.10 The risk of bias propagation and stigmatization underscores the necessity for meticulous model selection and continuous monitoring, capabilities that can be significantly enhanced by a modular, auditable Agentic AI and multi-agent system.

2. Typology of AI Agents and Agentic AI

The classification of AI agents has evolved significantly as the field has matured, with various taxonomies proposed to categorize different agent architectures based on their capabilities, design principles, and operational characteristics. Understanding these classifications provides a framework for analyzing the strengths, limitations, and appropriate applications of different agent types.47

To understand AI Agents through their evolution, we can establish a structured framework that traces their progression from foundational generative models to sophisticated, collaborative multi-agent systems.

In this part we introduce a hierarchy that illustrates a conceptual and evolutionary progression: it begins with the foundational principles of intelligent agents, advances through the enabling capabilities of Generative AI, and culminates in the sophisticated, collaborative systems of Agentic AI.

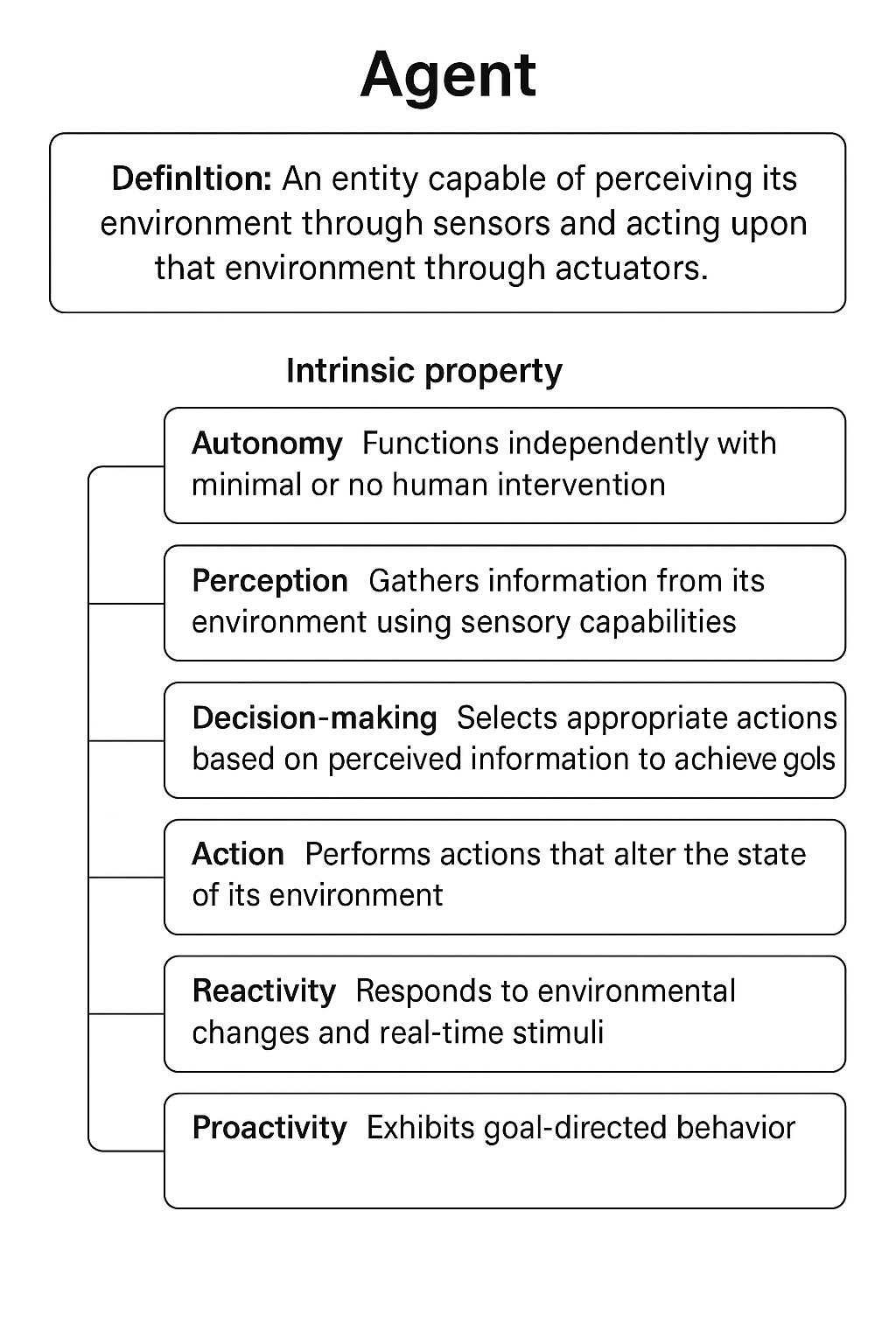

I. Foundational Concepts: Intelligent Agents (General Definition)

At the base are the core characteristics that define any intelligent agent, applicable across various forms of AI.

Definition: An entity capable of perceiving its environment through sensors and acting upon that environment through actuators. Agents possess intrinsic properties:

Autonomy: Functions independently with minimal or no human intervention.

Perception: Gathers information from its environment using sensory capabilities.

Decision-making: Selects appropriate actions based on perceived information to achieve goals.

Action: Performs actions that alter the state of its environment.

Reactivity: Responds to environmental changes and real-time stimuli.

Proactivity: Exhibits goal-directed behavior.

Social Ability: Interacts with other agents and humans.

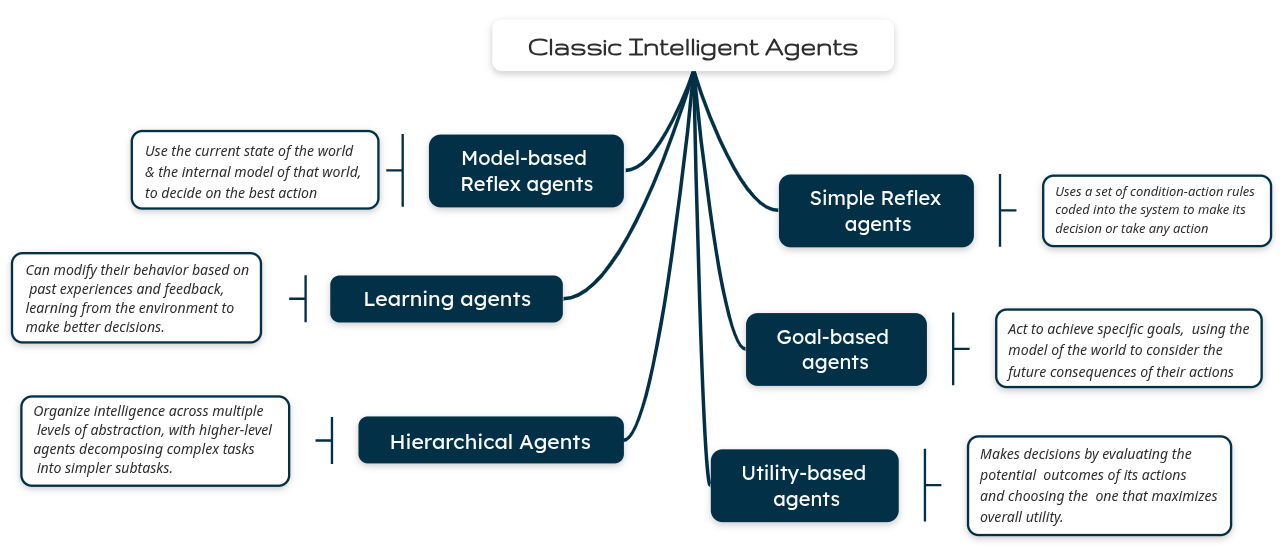

Classic Agent Types (existing along a spectrum of capabilities, often pre-dating modern LLM-driven agents but influencing their design):

Simple Reflex Agents: Operate based on predefined rules and immediate perceptual data ("if-then" statements).

Model-Based Reflex Agents: Incorporate an internal model of the world to maintain state information and evaluate probable outcomes.

Goal-Based Agents: Incorporate explicit representations of desirable world states (goals) and select actions to achieve them through means-end reasoning.

Utility-Based Agents: Use a utility function to assign values to different world states, allowing for nuanced decisions when multiple goals conflict or under uncertainty.

Learning Agents: Incorporate mechanisms to improve performance through experience by modifying behavior based on feedback. (This category includes modern LLM-based agents, which build upon this concept).

Hierarchical Agents: Organize intelligence across multiple levels of abstraction, with higher-level agents decomposing complex tasks into simpler subtasks.
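To make the distinctions between these classic agent types concrete, the following minimal sketch contrasts a simple reflex agent, a model-based reflex agent, and a utility-based agent. The thermostat-style setting, class names, thresholds, and cost values are all illustrative assumptions and are not taken from any cited system.

```python
from dataclasses import dataclass, field


class SimpleReflexAgent:
    """Maps the current percept directly to an action via if-then rules."""

    def act(self, temperature: float) -> str:
        if temperature < 18.0:
            return "heat"
        if temperature > 24.0:
            return "cool"
        return "idle"


@dataclass
class ModelBasedReflexAgent:
    """Keeps internal state (past percepts) so it can react to trends."""

    history: list = field(default_factory=list)

    def act(self, temperature: float) -> str:
        self.history.append(temperature)
        if len(self.history) >= 2:
            trend = self.history[-1] - self.history[-2]
            # Anticipate: heat when the room is cooling quickly, even though
            # the current reading alone would not trigger the reflex rule.
            if trend <= -1.0 and temperature < 20.0:
                return "heat"
        return SimpleReflexAgent().act(temperature)


class UtilityBasedAgent:
    """Scores every candidate action and picks the highest-utility one."""

    TARGET = 21.0
    EFFECT = {"heat": 1.5, "cool": -1.5, "idle": 0.0}  # assumed change in degrees
    COST = {"heat": 0.3, "cool": 0.3, "idle": 0.0}     # assumed energy cost

    def utility(self, temperature: float, action: str) -> float:
        predicted = temperature + self.EFFECT[action]
        return -abs(predicted - self.TARGET) - self.COST[action]

    def act(self, temperature: float) -> str:
        return max(self.EFFECT, key=lambda a: self.utility(temperature, a))
```

The reflex agent sees only the present reading, the model-based agent uses remembered readings to act earlier, and the utility-based agent trades comfort against cost. Each step adds one capability on top of the previous one.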

Historical Context: Prior to 2022, intelligent agent development was rooted in Multi-Agent Systems (MAS) and Expert Systems, emphasizing social action and distributed intelligence. These classical systems typically had predefined rules, limited autonomy, and relied on symbolic reasoning.

Table 1. Comparison of classic agent types, illustrating how each agent type builds upon the capabilities of simpler ones, adding new dimensions of sophistication.

II. Enabling Technology: Generative AI (Post-2022 Foundation)

The widespread adoption of large-scale generative models (such as ChatGPT, released in November 2022) served as a pivotal precursor, providing the foundation for more advanced agents.

A consistent theme in the literature is the positioning of generative AI as the foundational precursor to agentic intelligence. These systems primarily operate on pre-trained large language models (LLMs) and large image models (LIMs), which are optimized to synthesize multi-modal content including text, images, audio, or code based on input prompts. While highly communicative, generative models fundamentally exhibit reactive behavior: they produce output only when explicitly prompted and do not pursue goals autonomously or engage in self-initiated reasoning.46

Core Function: Primarily synthesize multi-modal content (text, images, audio, code) based on input prompts, utilizing pre-trained Large Language Models (LLMs) and Large Image Models (LIMs).

Key Traits: Fundamentally reactive, input-driven, and lack internal states, persistent memory, or autonomous goal-following mechanisms. They cannot directly act upon the environment or manipulate digital tools independently without human-engineered wrappers.

Prompt Dependency and Statelessness: Their operations are triggered by user-specified prompts; they do not retain context across interactions unless explicitly prompted, and lack intrinsic feedback loops or multi-step planning.

III. Evolution to Modern Autonomous Systems (LLM-Driven Era)

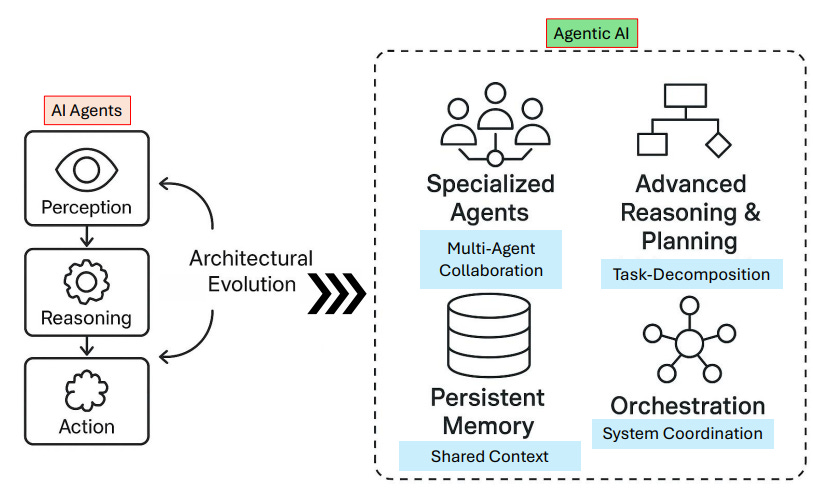

The evolution progressed through two major post-generative phases: AI Agents and Agentic AI.

1. AI Agents (LLM-based, Single-Entity Focus)

This class of systems emerged by enhancing LLMs with capabilities for external tool use, function calling, and sequential reasoning. They represent single-entity systems that perform goal-directed tasks.

The rise of LLM‑based agents marks the latest leap: by integrating large language models with modules for memory, planning, tool invocation, and real‑time data retrieval, these systems achieve richer reasoning, contextual understanding, and autonomous workflow orchestration. In practice, most modern implementations blend features across multiple paradigms, forming a continuum of capabilities tailored to diverse application domains.

Definition: Autonomous software entities engineered for goal-directed task execution within bounded digital environments, leveraging LLMs (and LIMs) as their primary decision-making and cognitive engines.

Core Characteristics:

Autonomy: Function with minimal human intervention after deployment.

Task-Specificity: Purpose-built for narrow, well-defined tasks.

Reactivity and Adaptation: Respond to real-time stimuli and can refine behavior over time through feedback loops.

Key Architectural Components:

Perception Module: Intakes and pre-processes input signals (e.g., natural language prompts, APIs, sensor streams).

Knowledge Representation and Reasoning (KRR) Module: Applies logic (symbolic, statistical, hybrid) to input data, leveraging LLMs for understanding, planning, and response generation.

Action Selection and Execution Module: Translates decisions into external actions, often by invoking tools or APIs.

Learning and Adaptation Mechanisms: Improve performance through experience and feedback, though often limited compared to Agentic AI.

Advanced Functionalities and Capabilities (Post-Generative Enhancements):

LLMs as Core Reasoning Components: LLMs such as Gemini, GPT-4, PaLM, Claude, and LLaMA serve as the cognitive engine, interpreting user goals, formulating plans, selecting strategies, and managing workflows.

Tool-Augmentation (Function & API Calls): Essential for extending agent functionality beyond pre-trained knowledge or internal context. Agents generate structured outputs (e.g., JSON, SQL, Python) to call functions, query APIs for real-time data (e.g., stock prices, weather), or interact with external services. The ReAct framework exemplifies this by integrating reasoning (e.g., Chain-of-Thought or rule-based analysis), action (e.g., tool use), and observation (e.g., context updates).

Memory Architectures: Addresses limitations in long-horizon planning and session continuity by persisting information.

Short-term Memory/Working Memory: Manages transient information within the LLM's context window, often encapsulating crucial interaction history.

Long-term Memory: Stores and regulates substantial volumes of knowledge (experiential data, historical records) using external knowledge bases, databases (e.g., vector databases, knowledge graphs, relational databases), or API calls. Chunking and chaining techniques divide interactions into manageable segments for efficient storage and retrieval.

Memory Retrieval: Facilitated through online learning and adaptive modification, leveraging retrieval-augmented generation (RAG).

Rethinking/Self-Critique Mechanisms: Enables agents to evaluate prior decisions and environmental feedback, enhancing intelligence and adaptability. Includes:

In-Context Learning Methods: Leveraging prompts (e.g., Chain of Thought (CoT), Auto CoT, Zero-shot CoT, Tree of Thought (ToT), Self-consistency, Least-to-Most, Skeleton of Thought, Graph of Thought, Progressive Hint Prompting, Self-Refine) to guide reasoning and systematic problem deconstruction.

Self-Reflection (e.g., Reflexion): Computing heuristics after actions to determine if environmental reset is needed, bolstering reasoning.

Supervised/Reinforcement Learning Methods: Learning from feedback, historical experiences, and policy gradients (e.g., CoH, Process Supervision, Introspective Tips, Retroformer, REMEMBER, Dialogue Shaping, REX, ICPI).

Modular Coordination Methods: Multiple modules working in concert for planning and introspection (e.g., DIVERSITY, DEPS, PET, Three-Part System).

Agentic Loop (Reasoning, Action, Observation): Iterative process where agents reason, act, and observe results, allowing for context-sensitive behaviors and reducing errors.

Proactive Intelligence: Transitioning from reactive to initiating tasks based on learned patterns, contextual cues, or latent goals.

Environmental Interaction Capabilities: Agents interact with diverse environments:

Computer Environments: Web scraping, API calls, web searching, software interaction, database queries.

Gaming Environments: Character control, environmental interactions, state perception.

Coding Environments: Code generation, debugging, evaluation.

Real-World Environments: Data collection (sensors), device control (robot arms, drones), human-machine interaction.

Simulation Environments: Model manipulation, data analysis, optimization.

Action Capabilities: Beyond text generation, agents can perform actions or employ tools.

Tool Employment: Integrate LLM with external tools (e.g., MRKL, TALM, ToolFormer, HuggingGPT, Chameleon, RestGPT, D-Bot).

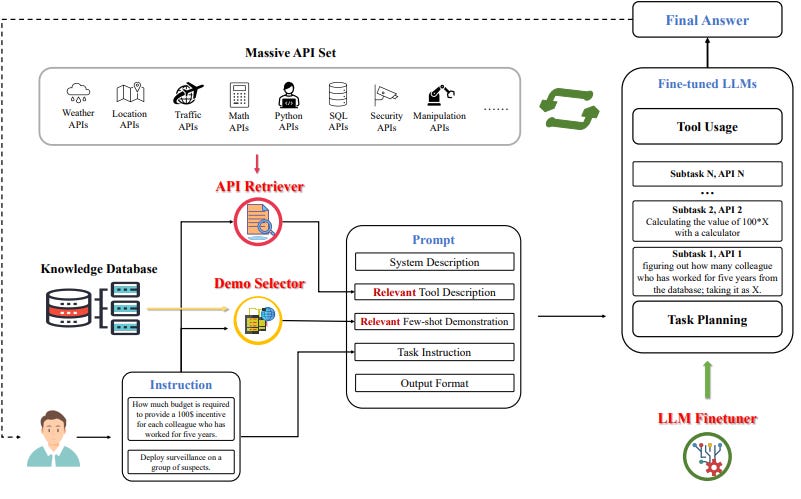

Figure 6. Chameleon approach with GPT-4 on ScienceQA [32], a multi-modal question answering benchmark in scientific domains. Chameleon adapts to different queries by synthesizing programs that compose various tools and executing them sequentially to obtain final answers.

Tool Planning: Model chain-like thinking into multi-turn dialogues for complex tasks (e.g., ChatCoT, TPTU, ToolLLM, Gentopia).

Figure 7. TPTU framework. This framework is engineered to enhance the capabilities of LLMs in Task Planning and Tool Usage (TPTU) within complex real-world systems.

Tool Creation: Generating new tools suitable for diverse tasks (e.g., Cai et al., CRAFT).

Trust & Safety Mechanisms: Verifiable output logging, bias detection, ethical guardrails for increased autonomy.
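The reasoning, action, and observation cycle described in this list, together with tool invocation and working memory, can be sketched in a few lines. This is a minimal illustration under stated assumptions: `stub_reasoner` is a hard-coded stand-in for an LLM call, and the tool names and return values in `TOOLS` are hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical tool registry: tool name -> plain Python callable.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_mood_log": lambda user_id: "3 low-mood entries this week",
    "word_count": lambda text: str(len(text.split())),
}


def stub_reasoner(goal: str, observations: List[str]) -> Optional[Tuple[str, str]]:
    """Stand-in for an LLM call: returns (tool_name, argument), or None when done."""
    if not observations:
        return ("lookup_mood_log", "user-123")
    if len(observations) == 1:
        return ("word_count", observations[0])
    return None  # the stub decides it has gathered enough information


def run_agent(goal: str, max_steps: int = 5) -> List[str]:
    """Reason -> act -> observe until the reasoner stops or the step budget ends."""
    observations: List[str] = []  # working memory for this episode
    for _ in range(max_steps):
        decision = stub_reasoner(goal, observations)  # reason
        if decision is None:
            break
        tool_name, argument = decision
        tool = TOOLS.get(tool_name)
        if tool is None:
            # Record the failure as an observation so the next step can react.
            observations.append(f"error: unknown tool {tool_name!r}")
            continue
        observations.append(tool(argument))           # act, then observe
    return observations
```

The `max_steps` budget is a simple safeguard against runaway loops. In a real system the reasoner would be an LLM producing structured tool calls, and the observations would be fed back into its context.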

2. Agentic AI: Paradigm Shift

The limitations inherent in single-agent AI systems and conventional chatbots have prompted a paradigm shift towards Agentic AI, which offers a more sophisticated approach to complex problem-solving. Agentic AI systems are designed to operate autonomously, adapt in real-time, and execute multi-step tasks based on defined contexts and objectives.12 These systems are typically constructed from multiple specialized AI agents that leverage large language models (LLMs) and advanced reasoning capabilities.12 Unlike single-agent systems, where a single entity attempts to manage all aspects of a task, an Agentic AI system architecture involves multiple independent agents collaborating to achieve complex goals.12 This fundamental distinction directly addresses the challenges faced by monolithic AI models in handling the diverse and nuanced demands of psychological counseling.

This represents a paradigm shift from isolated AI Agents, enabling multiple intelligent entities to collaboratively pursue goals.

Definition: Complex, multi-agent systems in which specialized agents collaboratively decompose goals, communicate, and coordinate toward shared objectives, managing complexity through decomposition and specialization.

Enhanced Functionalities:

Ensemble of Specialized Agents: Consist of multiple agents, each assigned a specialized function or task, interacting via communication channels. Roles are often modular, reusable, and role-bound (e.g., MetaGPT, ChatDev).

Dynamic Task Decomposition: A user-specified objective is automatically parsed and divided into smaller, manageable subtasks by planning agents, then distributed across the agent network.

Orchestration Layers / Meta-Agents: Supervisory agents (orchestrators) coordinate the lifecycle of subordinate agents, manage dependencies, assign roles, and resolve conflicts. This enables flexible, adaptive, cooperative, and collaborative intelligence (e.g., ChatDev, AutoGen, CrewAI). Unified Orchestration is a future goal.

Inter-Agent Communication: Mediated through distributed communication channels (e.g., asynchronous messaging queues, shared memory buffers, intermediate output exchanges). Requires effective communication protocols (e.g., message semantics, syntax, interaction protocols, transport protocols, FIPA's ACL, Google's A2A protocol).

Shared/Persistent Memory: Agents maintain local memory while accessing shared global memory to facilitate coordination across task cycles or agent sessions. This includes central knowledge bases or shared parameters.

Multi-Role Coordination (Relationship Types): Agents engage in various relationships:

Cooperative: Focus on role and task allocation, collaborative decision-making.

Competitive: Involve designing competitive strategies, information concealment, and adversarial behavior.

Mixed: Agents balance cooperation and competition.

Hierarchical: Parent-node agents decompose tasks and assign to child-node agents, who report summarized information (e.g., AutoGen).

Multi-Agent System (MAS) Planning Types:

Centralized Planning Decentralized Execution (CPDE): A centralized LLM plans for all agents, optimizing globally.

Decentralized Planning Decentralized Execution (DPDE): Individual LLMs plan for each agent, enhancing robustness and scalability through local communication and negotiation.

Advanced Reasoning and Planning: Embeds iterative reasoning capabilities (e.g., ReAct, Chain-of-Thought (CoT) , Tree of Thoughts) allowing agents to break down complex tasks, evaluate intermediate results, and re-plan actions dynamically.

Emergent Behavior and Predictability Management: Emergent behaviors arise from the interactions of autonomous agents; the system must therefore detect and manage unintended outcomes and instability (e.g., infinite planning loops, deadlocks).

Scalability: Multi-agent scaling enables specialized agents to operate concurrently under distributed control.

Ethical Governance: Crucial for ensuring agent collectives operate within aligned moral and legal boundaries, defining accountability structures, verification mechanisms, and safety constraints. This includes Accountability for distributed decisions and Role Isolation to prevent rogue agents.

Simulation Planning: Core capability enabling agent collectives to model hypothetical decision trajectories, forecast consequences, and optimize actions through internal trial-and-error.

Domain-Specific Systems: Tailored for specific sectors (e.g., law, medicine, logistics, climate science) to leverage contextual specialization.

Absolute Zero: Reinforced Self-play Reasoning with Zero Data (AZR): Reimagines learning by enabling agents to autonomously generate, validate, and solve their own tasks without external datasets, facilitating self-evolving and self-improving ecosystems.
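Several of the functionalities listed above (an orchestration layer, task decomposition, inter-agent messaging, and shared memory) can be illustrated with a small centralized-planning sketch. It is an assumption-laden toy: the `Message` structure, the fixed decomposition plan, and the two specialist functions are hypothetical, and an in-process `deque` stands in for a real message bus.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, Dict, List


@dataclass
class Message:
    sender: str
    recipient: str
    content: str


class Orchestrator:
    """Centralized planner: splits a goal, routes subtasks, collects results."""

    def __init__(self, agents: Dict[str, Callable[[str], str]]):
        self.agents = agents
        self.queue: Deque[Message] = deque()      # stand-in for a message bus
        self.shared_memory: Dict[str, str] = {}   # results visible to all agents

    def decompose(self, goal: str) -> List[Message]:
        # Hypothetical fixed plan: one subtask per registered agent.
        # A real planning agent would generate this dynamically.
        return [Message("orchestrator", name, goal) for name in self.agents]

    def run(self, goal: str) -> Dict[str, str]:
        self.queue.extend(self.decompose(goal))
        while self.queue:
            msg = self.queue.popleft()
            handler = self.agents[msg.recipient]
            self.shared_memory[msg.recipient] = handler(msg.content)
        return self.shared_memory


# Two toy specialists with assumed roles.
def retriever(goal: str) -> str:
    return f"sources for '{goal}'"


def summarizer(goal: str) -> str:
    return f"summary of '{goal}'"
```

Calling `Orchestrator({"retriever": retriever, "summarizer": summarizer}).run("CBT chatbots")` routes the goal to each specialist and returns the shared memory. This corresponds loosely to the centralized planning pattern (CPDE); a decentralized variant would give each agent its own planner and let agents exchange messages directly.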

This hierarchy demonstrates that AI Agents are sophisticated single-task automators, while Agentic AI represents a more complex, coordinated, and collaborative ecosystem of these agents, designed to tackle even grander, more dynamic challenges.

Case Study:

Clinical decision support in hospital ICUs through synchronized agents for diagnostics, treatment planning, and EHR analysis, enhancing safety and workflow efficiency.

A research system where agents retrieve literature, summarize findings, and draft reports collaboratively, with a meta-agent overseeing the process.

Agentic AI uses sophisticated reasoning and iterative planning to autonomously solve complex, multi-step problems.

3. Deconstructing Cognitive Behavioral Therapy (CBT) for Computational Modeling

3.1 The Theoretical Bedrock: The Cognitive Model

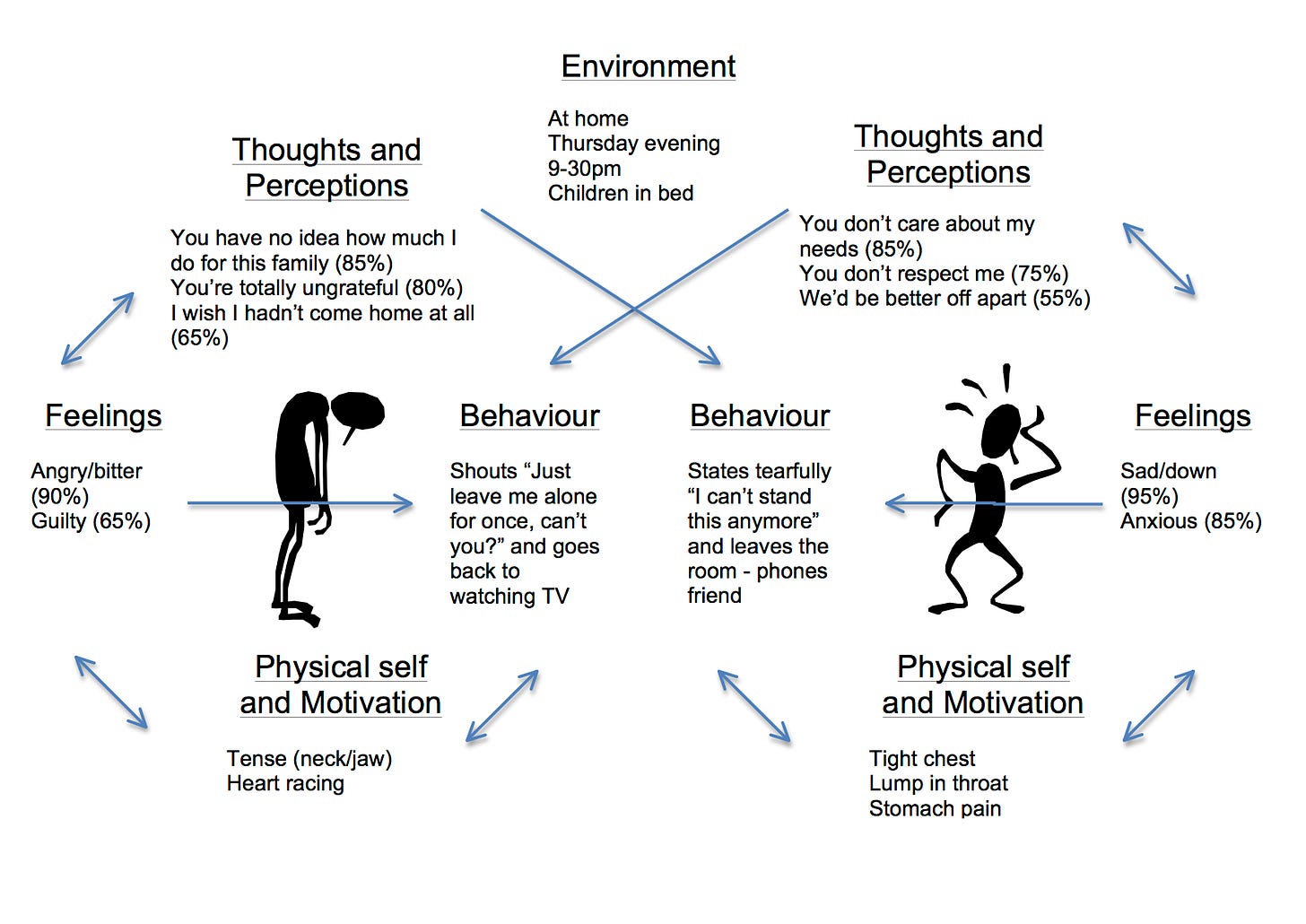

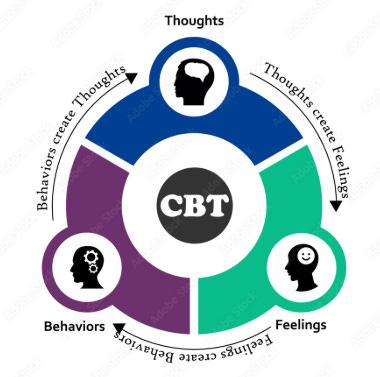

Cognitive Behavioral Therapy (CBT) is deeply rooted in the principle that an individual's thoughts, feelings, and behaviors are inextricably interconnected and exert mutual influence over one another.15 A central tenet of CBT posits that dysfunctional thinking, which directly impacts an individual's mood and behavior, is a common element across various psychological disturbances.16 The overarching objective of CBT is to alleviate symptoms by actively challenging and modifying these cognitive distortions—maladaptive thoughts, beliefs, and attitudes—and their associated unhelpful behaviors. This process ultimately aims to improve emotional regulation and equip individuals with effective coping strategies.18

The "CBT triangle," or sometimes "diamond," serves as a pivotal visual and conceptual tool within the therapeutic process, effectively illustrating this fundamental interconnectedness.15 This model typically comprises three primary components:

Thoughts (Cognition): These encompass an individual's interpretations, beliefs, and internal dialogue concerning themselves, others, and their surrounding environment.15 Within the CBT framework, thoughts are regarded as "products of the mind" that significantly influence both emotions and behaviors.16

Emotions (Feelings): This component includes both physiological sensations and psychological responses, such as fear, sadness, or joy, that arise in response to thoughts and situations.15

Behaviors: These refer to the observable actions or avoidance responses that are a direct consequence of an individual's thoughts and emotions.15

The model emphasizes that a change in any one of these elements will inevitably impact the others, thereby providing multiple entry points for therapeutic intervention.16 From a computational modeling perspective, this cognitive triangle can be conceptualized as a foundational data structure. Each node (representing a thought, emotion, or behavior) can store various attributes, and the edges connecting them represent the causal or influential relationships. This structured representation enables an AI system to systematically "map out the interactions" 15 within a user's reported experience.

The inherent structure of the cognitive model lends itself remarkably well to computational representation. For instance, graph databases are specifically designed to store and query complex data relationships, making them an ideal choice for this purpose. In such a system, entities like thoughts, emotions, and behaviors can be represented as nodes, and the relationships between them as edges.19 This architecture facilitates efficient storage and querying of how these components interact dynamically. Furthermore, the development of specialized ontological structures can be orchestrated to assess human "thoughts" in accordance with established CBT guidelines.20 These ontological structures can be complemented by knowledge elicitation algorithms designed to reason over the knowledge constructs embedded within the ontology.20 This approach moves beyond simple textual analysis to a more profound, structured understanding of the user's cognitive state, effectively serving as the core "mind" of the CBT AI. The cognitive triangle is not merely a static diagram but represents a dynamic system where changes in one component ripple through others. For an AI, this implies the necessity of a model that can track state changes, infer causality, and predict outcomes based on user input, rather than simply classifying isolated thoughts. This requires a dynamic computational model, potentially a state machine or a graph-based system, capable of traversing and updating relationships in real-time. The use of knowledge graphs and ontological structures for representing complex relationships is a crucial technical consideration. This suggests that the AI's understanding of CBT principles and the user's specific cognitive patterns should be stored in a highly structured, interconnected format, enabling sophisticated reasoning that goes beyond what simple large language model (LLM) fine-tuning might offer.
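As a rough illustration of the graph-based representation discussed above, the following sketch stores thoughts, emotions, and behaviors as typed nodes with labeled edges, and walks the graph to find what a change in one node may ripple into. It uses a plain in-memory structure rather than a graph database; the class names, the `intensity` attribute, and the relation labels are illustrative assumptions.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class Node:
    node_id: str
    kind: str           # "thought", "emotion", or "behavior"
    text: str
    intensity: int = 0  # assumed 0-100 self-rating


@dataclass
class CognitiveGraph:
    nodes: Dict[str, Node] = field(default_factory=dict)
    edges: Dict[str, List[Tuple[str, str]]] = field(default_factory=dict)

    def add_node(self, node: Node) -> None:
        self.nodes[node.node_id] = node
        self.edges.setdefault(node.node_id, [])

    def add_edge(self, source: str, relation: str, target: str) -> None:
        if source not in self.nodes or target not in self.nodes:
            raise KeyError("both endpoints must be added before linking them")
        self.edges[source].append((relation, target))

    def downstream(self, start: str) -> List[str]:
        """Breadth-first walk over everything a change at `start` may reach."""
        seen: Set[str] = {start}
        order: List[str] = []
        queue = deque([start])
        while queue:
            current = queue.popleft()
            for _, target in self.edges[current]:
                if target not in seen:
                    seen.add(target)
                    order.append(target)
                    queue.append(target)
        return order


graph = CognitiveGraph()
graph.add_node(Node("t1", "thought", "I will fail the presentation", 80))
graph.add_node(Node("e1", "emotion", "anxiety", 70))
graph.add_node(Node("b1", "behavior", "calls in sick"))
graph.add_edge("t1", "triggers", "e1")
graph.add_edge("e1", "motivates", "b1")
graph.add_edge("b1", "reinforces", "t1")  # the cycle closes the triangle
```

Here `graph.downstream("t1")` visits the emotion and then the behavior, while the `seen` set keeps the reinforcing cycle from looping forever. A production system would likely move this into a graph store and attach timestamps so that state changes can be tracked across sessions.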

3.2 Key Therapeutic Components

3.2.1 Cognitive Restructuring

Cognitive restructuring is a fundamental technique within CBT aimed at systematically challenging and modifying Negative Automatic Thoughts (NATs) and other unhelpful thinking patterns.21 This process involves a structured sequence of steps:

Identifying Negative Automatic Thoughts (NATs): These are intrusive, often distressing thoughts that arise spontaneously in response to specific situations and significantly influence an individual's emotional state and behavior.21

Challenging These Thoughts: This critical step involves evaluating the evidence for and against the validity of the thought. It prompts individuals to ask questions such as, "Is this thought really true?" or "What evidence do I have to support this thought?".21

Reframing Negative Thoughts: The final step involves replacing the identified negative thought with a healthier, more balanced, or constructive perspective.21 This reframe shifts the focus from perceived limitations to potential opportunities; for example, changing "I can't do this" to "I can learn to do this".23
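The three steps above map naturally onto a thought-record data structure that tracks which step a user has reached. The sketch below is one possible shape under stated assumptions: the field names and the reframing prompt are hypothetical, while the challenge prompt reuses the question quoted in the text.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ThoughtRecord:
    situation: str
    automatic_thought: str                                     # step 1: identify
    evidence_for: List[str] = field(default_factory=list)      # step 2: challenge
    evidence_against: List[str] = field(default_factory=list)
    balanced_thought: Optional[str] = None                     # step 3: reframe

    def next_step(self) -> str:
        """Return the restructuring step this record is still waiting on."""
        if not self.evidence_for and not self.evidence_against:
            return "challenge"
        if self.balanced_thought is None:
            return "reframe"
        return "complete"

    def next_prompt(self) -> str:
        """Return a question for the pending step (the reframe wording is assumed)."""
        step = self.next_step()
        if step == "challenge":
            return "What evidence do I have to support this thought?"
        if step == "reframe":
            return "What would be a more balanced way to see this situation?"
        return "Record complete."
```

A record created with only a situation and an automatic thought reports `"challenge"` as its next step. Once evidence is added it moves to `"reframe"`, and once a balanced thought such as "I can learn to do this" is stored it reports `"complete"`.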

For an AI system, learning to identify Negative Automatic Thoughts (NATs) can be achieved through advanced Natural Language Processing (NLP) techniques. Because thoughts, language, and behaviors are closely connected, limiting beliefs and recurring negative self-talk leave detectable traces in what users write, and NLP models can be trained to pick up these patterns.23 This process involves:

Awareness and Identification: Training the AI to recognize the presence of negative self-talk and recurring thought patterns in user input, mimicking how humans might identify these through journaling or self-assessment.23

Semantic Analysis: Analyzing the meaning, sentiment, and linguistic structure of user statements to detect expressions indicative of NATs. This includes recognizing extreme terms such as "always," "never," "everybody," or "nobody," which often signal overgeneralization or other distortions.24

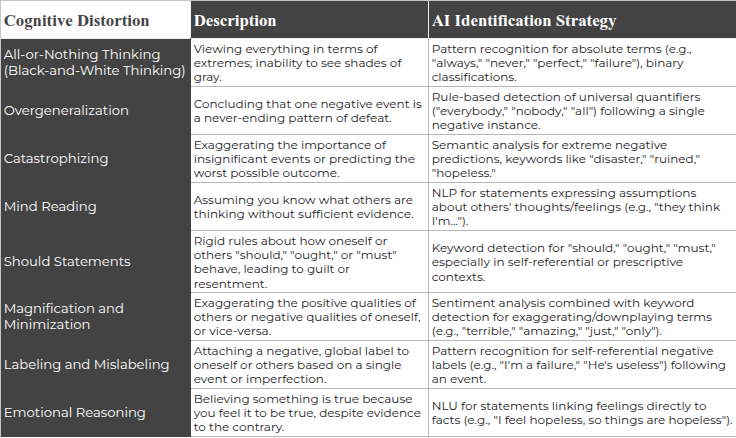

Recognizing cognitive distortions involves defining common distortions in a format amenable to AI processing, either through rule-based systems or pattern-recognition algorithms. Cognitive distortions are defined as faulty, irrational beliefs and thinking patterns that can lead to psychological distress.26 AI systems can be developed to automatically detect and classify these distortions using various machine learning techniques.27

Rule-Based Systems: Explicit rules can be defined for identifying common distortions. For instance, "All-or-nothing thinking," also known as "black-and-white thinking," can be identified by keywords or phrases that express absolute extremes.26 Similarly, "Should statements" can be flagged by the presence of words like "should," "ought," or "must".26

Pattern-Recognition Algorithms: Machine learning models, including deep learning and neural networks, can be trained to detect regularities and recurring structures in textual data that correspond to specific cognitive distortions.27 This involves:

Feature Extraction: Identifying relevant linguistic features from user input, such as specific words, phrases, grammatical structures, or overall sentiment.29

Classification: Employing supervised learning models trained on meticulously annotated datasets of real-world cognitive distortion event recollections and therapy logs to classify text into predefined distortion categories.27 Research has already demonstrated successful attempts at detecting and classifying up to 15 different cognitive distortions from text using machine learning techniques.27
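The feature-extraction and classification steps can be illustrated with a small bag-of-words Naive Bayes classifier written in plain Python. This is a stand-in chosen for brevity, not the method used in the cited studies; the six training sentences and the two label names are hand-written toy data, and a real system would train on a clinically annotated corpus.

```python
import math
import re
from collections import Counter, defaultdict

# Toy, hand-written examples for illustration only (an assumption of this sketch).
TRAIN = [
    ("I always ruin everything", "overgeneralization"),
    ("Nobody ever listens to me", "overgeneralization"),
    ("Everybody always leaves me", "overgeneralization"),
    ("I should be stronger than this", "should_statement"),
    ("I must never make mistakes", "should_statement"),
    ("I ought to have known better", "should_statement"),
]


def tokenize(text):
    """Feature extraction: lowercase word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Multinomial Naive Bayes with add-one smoothing."""

    def fit(self, examples):
        self.word_counts = defaultdict(Counter)
        self.label_counts = Counter()
        self.vocab = set()
        for text, label in examples:
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.label_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def predict(self, text):
        tokens = tokenize(text)
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.label_counts.items():
            score = math.log(count / total)  # log prior
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for token in tokens:
                score += math.log((self.word_counts[label][token] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


model = NaiveBayes().fit(TRAIN)
```

With this toy data, `model.predict("I should have studied more")` returns `"should_statement"`. Fine-tuned transformer models would replace both the tokenizer and the classifier in practice; the sketch only shows the shape of the supervised pipeline.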

The highly structured nature of cognitive restructuring, with its clear, sequential steps (identify, challenge, reframe) and identifiable patterns (NATs, cognitive distortions), makes it particularly amenable to algorithmic implementation. This component of CBT stands out as a strong candidate for early and effective AI automation within a multi-agent system. The existence of "thought records" and "worksheets" 21 further reinforces this structured approach, translating into a clear workflow for an AI: receive input, apply NLP and machine learning models to identify patterns (NATs, distortions), and then generate appropriate prompts or suggestions for reframing. The availability of novel datasets specifically annotated for cognitive distortions 28 is a critical enabler, shifting the detection of these complex psychological constructs from potentially brittle rule-based methods to more nuanced data-driven machine learning approaches. The AI's ability to spot distortions therefore need not rely solely on pre-programmed rules; it can also learn from a diverse range of human expressions of distorted thinking, which should improve its robustness and adaptability. Together, these properties support the feasibility of a Cognitive Analyzer Agent.

3.2.2 Socratic Questioning

Socratic questioning, a cornerstone of CBT, is far more than a simple sequence of inquiries; it is a sophisticated, goal-oriented dialogue strategy aimed at guided discovery.25 Originating from the ancient Greek philosopher Socrates, this method compels individuals to think critically, examine their beliefs, and arrive at their own conclusions, rather than being passively provided with answers.25 In CBT, Socratic questioning serves to help individuals gain deeper insight into their own thoughts by prompting objective reflection through a structured inquiry. It facilitates a dialogue between the individual and the therapist (or, in this context, the AI) to challenge distorted thinking and scrutinize the underlying assumptions of their beliefs.25 The objective is to foster user insight, not to dispense direct answers, thereby empowering the individual to uncover patterns and pathways to solutions.25

Implementing Socratic questioning effectively within an AI system presents significant challenges. Traditional AI chatbots, often relying on rule-based algorithms, tend to provide prescripted responses, which can feel unnatural and lack the dynamic adaptability of human therapeutic dialogue.31 Early attempts at AI-powered Socratic dialogue, such as Socrates 1.0, encountered issues including overly elaborate or verbose responses that disrupted the natural flow of conversation, and a tendency to lose focus or "forget its role" during longer exchanges.31 A particularly complex challenge for AI is determining when a user's belief has been sufficiently explored or modified to conclude a dialogue, a skill that human therapists intuitively possess but that is difficult to formalize computationally.31 The persistent concern regarding AI's "lack of empathy and genuine human connection" 9 is also highly relevant here, as Socratic dialogue relies heavily on rapport and sensitive guidance. Users may perceive AI interactions as "cold words generated by an AI" and doubt its capacity to truly understand their complex emotional states, which could impede the effectiveness of the guided discovery process.9

Despite these complexities, advancements in multi-agent AI offer promising avenues. The development of Socrates 2.0, a multi-agent LLM-based tool, provides a compelling example. This system features an AI therapist supported by an invisible AI supervisor and an AI external rater.31 This multi-agent architecture appeared to mitigate common LLM issues like looping and significantly improved the overall dialogue experience.31 This suggests that a single LLM can struggle with the meta-cognition and adaptive control required for effective Socratic dialogue, and that a distributed approach, in which different agents contribute to the dialogue's quality and adherence to therapeutic goals, is a promising remedy. AI's precision in language analysis and pattern recognition can augment the human insight involved in guiding the Socratic method by surfacing unstated assumptions, detecting subtle signals of resistance, and revealing deeper implications in user dialogue.32 This collaborative approach aims to layer clarity, precision, and depth onto human-led inquiry, enabling the AI to challenge overconfidence in what one knows, question the validity of assertions, and encourage reflection on the limits of knowledge without direct confrontation.33
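The idea of a dialogue agent whose drafts are reviewed by a separate supervisor can be sketched as a short revise loop. This is not the Socrates 2.0 implementation; the function names, the `Review` type, and the toy stand-ins for the therapist and supervisor are all hypothetical, and the external rater is omitted for brevity. In a real system each stand-in would wrap an LLM call.

```python
from dataclasses import dataclass


@dataclass
class Review:
    approved: bool
    note: str = ""


def supervised_reply(user_msg, history, draft_fn, supervisor_fn, max_revisions=2):
    """Draft a reply, let a supervisor review it, and revise up to max_revisions times."""
    note = ""
    reply = draft_fn(user_msg, history, note)
    for _ in range(max_revisions):
        review = supervisor_fn(reply, history)
        if review.approved:
            break
        note = review.note
        reply = draft_fn(user_msg, history, note)
    return reply


# Toy stand-ins (hypothetical): real components would call a language model.
def toy_therapist(user_msg, history, note):
    if "already asked" in note:
        return "What would you say to a friend who had this thought?"
    return "What evidence supports this thought?"


def toy_supervisor(reply, history):
    # Reject a question that has already been asked, to avoid looping.
    if reply in history:
        return Review(False, "already asked; try a different angle")
    return Review(True)
```

Calling `supervised_reply("I'm a failure", ["What evidence supports this thought?"], toy_therapist, toy_supervisor)` yields the second question, because the supervisor rejects the repeated draft. Capping revisions keeps the loop from stalling when the supervisor never approves.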

3.2.3 Behavioral Activation & Experiments

Behavioral Activation (BA) is a third-generation behavior therapy, often integrated within a CBT framework, primarily focused on improving mood through active engagement and planning of potentially mood-boosting activities.34 It operates on the principle that changing behavior first can lead to improvements in emotions, particularly by breaking the harmful cycle where reduced activity exacerbates low mood and increases avoidance.35 BA also involves understanding an individual's specific avoidance behaviors and employing methods to overcome them, utilizing positive reinforcements to increase adaptive behaviors and reduce negative outcomes associated with avoidance.34

The structured nature of BA lends itself well to computational approaches. Key steps in BA, such as tracking behavior, identifying energy drains, brainstorming pleasurable activities, and scheduling them, are inherently algorithmic.36 Behavioral activation worksheets, for instance, provide a practical framework for identifying values, setting goals, planning activities, and tracking progress, transforming abstract therapeutic concepts into concrete, actionable steps.37 For an AI system, this translates into distinct computational tasks:

Activity Monitoring: The AI can facilitate the logging of daily activities, along with mood ratings (e.g., on a scale of 1-10) and a sense of pleasure or mastery for each activity.35 This self-monitoring helps the AI identify patterns, such as activities that correlate with improved mood or increased mastery.35

Values-Based Planning and Goal Setting: The AI can guide users through exercises to clarify their core values (e.g., relationships, creativity, health) and then assist in translating these values into specific, achievable behavioral goals.35 This involves brainstorming activities that align with identified values and breaking down larger goals into manageable steps.35

Activity Scheduling: A crucial aspect of BA is the intentional scheduling of pleasurable and mastery-oriented activities, even when motivation is low.35 An AI agent can manage this scheduling, integrate it into the user's calendar, and provide reminders, taking the "in-the-moment choice out of the equation".36 This also includes identifying and helping eliminate "energy drains"—activities that provide no real purpose or pleasure.36

Behavioral Experiments: These involve testing whether negative thoughts or beliefs are accurate by taking real-world actions.21 For example, if a user believes, "If I ask for help, people will think I'm weak," the AI could propose an experiment to test this belief.21 The AI can help design these experiments, track their execution, and evaluate the outcomes, thereby facilitating cognitive restructuring through direct experience.15

Avoidance Pattern Identification: The AI can assist in recognizing and disrupting patterns of avoidance or withdrawal, exploring the underlying reasons, and suggesting alternative coping methods.35
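The activity-monitoring task above can be sketched as a small log with a per-activity mood summary. The names `ActivityEntry`, `average_mood_by_activity`, and `suggest_activities`, the 1-10 mastery scale, and the `min_mood` threshold are assumptions made for this example rather than part of any BA protocol.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class ActivityEntry:
    activity: str
    mood: int     # self-rated mood, 1-10 as in the text
    mastery: int  # sense of mastery; a 1-10 scale is assumed here


def average_mood_by_activity(entries):
    """Group logged entries by activity and average their mood ratings."""
    by_activity = defaultdict(list)
    for entry in entries:
        by_activity[entry.activity].append(entry.mood)
    return {name: mean(moods) for name, moods in by_activity.items()}


def suggest_activities(entries, min_mood=6):
    """Return activities whose average mood meets the threshold, best first."""
    averages = average_mood_by_activity(entries)
    ranked = sorted(averages.items(), key=lambda item: item[1], reverse=True)
    return [name for name, avg in ranked if avg >= min_mood]


log = [
    ActivityEntry("walk", 7, 5),
    ActivityEntry("walk", 8, 6),
    ActivityEntry("scrolling", 3, 1),
]
```

Here `suggest_activities(log)` returns `["walk"]`, and "scrolling" surfaces as a candidate energy drain. Averages over a handful of entries are noisy, so a scheduler built on this would treat them as prompts for discussion with the user rather than conclusions.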

The algorithmic nature of behavioral activation, particularly its reliance on tracking, planning, and scheduling, makes it highly amenable to AI implementation. The components of BA worksheets, such as activity tracking, values-based planning, and goal setting, provide direct inputs for an AI agent. The concept of "graded task assignment" implies an iterative and adaptive process that an AI system is well-suited to manage. The challenge for AI in this domain lies in truly understanding the subjective "meaning" of pleasure and mastery for an individual, rather than merely scheduling activities, requiring a nuanced understanding of user preferences and progress.

3.3 The Structured Nature of CBT Sessions

Cognitive Behavioral Therapy sessions typically follow a clear and consistent structure, ensuring both the patient and therapist are aligned on expectations and therapeutic progression.38 This structured nature is a key factor that lends itself exceptionally well to an algorithmic workflow managed by an AI agent. A typical CBT session often includes several distinct phases:

Mood Check-ins: Sessions frequently begin with a brief assessment of the client's mood and emotional state since the last interaction. Ideally, this involves the client completing a questionnaire beforehand or providing a simple rating of their mood.38

Agenda Setting: A collaborative process where the therapist and client jointly decide on the most important topics or problems to cover during the session, prioritizing based on severity or immediate relevance.38 This ensures the session remains focused and goal-oriented.

Reviewing Homework: A significant portion of the session is dedicated to reviewing previously assigned "homework" or behavioral experiments. This allows for discussion of challenges, successes, and insights gained from real-world application of learned skills.38

Introducing New Concepts/Skills: New therapeutic concepts, cognitive restructuring techniques, or behavioral strategies are introduced and practiced within the session.38

Assigning New Homework/Action Plan: Before concluding, new "homework" assignments or an action plan for the coming week are collaboratively set. This ensures the client has concrete tasks to work on and experiment with outside the session.38

Eliciting Feedback and Summary: Sessions often conclude with the therapist asking the client for a summary of what they learned or found most helpful, and soliciting feedback on the session itself to facilitate continuous improvement.38

This inherent structure of CBT sessions, with its sequential tasks and clear objectives, is highly conducive to algorithmic representation and management. The consistent flow and modular components allow for the design of a state machine or a defined workflow that an orchestrator agent can manage. The concept of "modular procedures" and "algorithm-based modular psychotherapy" 40 directly supports the multi-agent system (MAS) approach, where different agents can be responsible for managing specific "modules" or stages of the session. For example, one agent could handle the mood check-in and agenda setting, another could guide the review of homework, and yet another could introduce new concepts. The therapist's role in setting agendas, assigning homework, and eliciting feedback can be directly mapped to the functions of specialized agents within the MAS, particularly an orchestrator agent that directs the overall therapeutic flow. This structured nature is a key enabler for computational modeling, allowing an AI system to provide a coherent, consistent, and effective therapeutic experience that mirrors the best practices of human-delivered CBT. The ability to personalize CBT strategies and optimize treatment delivery through AI-based tools further emphasizes how this structured approach aligns with the capabilities of advanced computational systems.41
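The session structure described in this section maps naturally onto a linear state machine. The sketch below is one minimal way an orchestrator might track it; the `Phase` and `Session` names are hypothetical, and a real workflow would allow branching (for example, skipping homework review in a first session).

```python
from enum import Enum


class Phase(Enum):
    MOOD_CHECK = "mood check-in"
    AGENDA = "agenda setting"
    HOMEWORK_REVIEW = "reviewing homework"
    NEW_SKILL = "introducing new concepts/skills"
    ASSIGN_HOMEWORK = "assigning new homework/action plan"
    FEEDBACK = "eliciting feedback and summary"
    DONE = "session closed"


ORDER = list(Phase)  # Enum members iterate in definition order


class Session:
    """Tracks the current phase and a log of completed phases."""

    def __init__(self):
        self.phase = Phase.MOOD_CHECK
        self.log = []

    def advance(self):
        """Mark the current phase complete and move to the next one."""
        if self.phase is Phase.DONE:
            raise RuntimeError("session already closed")
        self.log.append(self.phase)
        self.phase = ORDER[ORDER.index(self.phase) + 1]
        return self.phase
```

Keeping the phase order in data rather than in control flow makes it straightforward to assign each phase to a different agent and to audit which phases a session actually completed.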

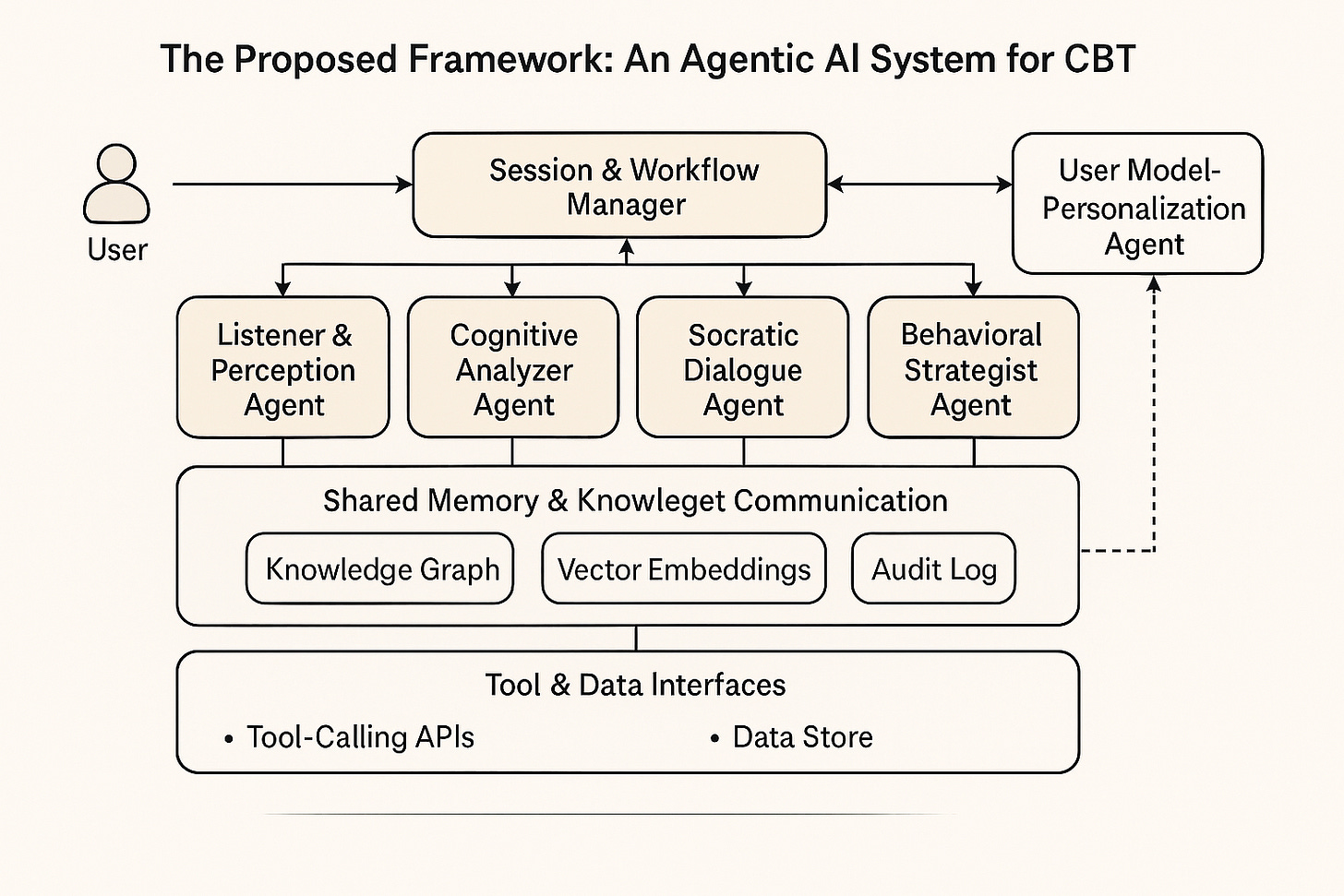

4. The Proposed Framework: An Agentic AI System/MAS for CBT

The Autonomous Multi-Agent System for Cognitive Behavioral Therapy is a framework designed to deliver personalized, scalable, and effective Cognitive Behavioral Therapy (CBT) through a collaborative network of specialized AI agents. This section describes the high-level architecture and agent roles, and details the design aspects necessary for a robust, ethically sound, and user-centric system: communication protocols, data flow, knowledge representation, learning mechanisms, ethical considerations, user interaction, scalability, integration, and safety mechanisms. The framework serves as a blueprint for the technical implementation discussed in later parts of the article, leveraging recent advancements in AI, such as large language models (LLMs) and multi-agent systems, to address the global mental health crisis.

4.1 High-Level Architecture

The Agentic AI-CBT system adopts an orchestrator-worker pattern, where a central Session & Workflow Manager Agent coordinates the activities of specialized subagents. This hybrid architecture balances centralized control with distributed specialization, ensuring modularity, scalability, and a seamless user experience. The user interacts with a unified interface that conceals the complexity of the multi-agent system, mimicking the natural progression of a human therapy session. The Session & Workflow Manager Agent acts as the "therapist's mind," maintaining session state, setting agendas, managing timing, and directing information flow among agents. The system can be visualized as a network of interconnected AI entities, with information flowing from user input through various agents to produce tailored therapeutic responses.

4.2 Defining the Specialist Agents

The Agentic AI-CBT framework comprises specialized agents, each replicating a key function of a human CBT therapist. Their roles ensure optimized performance in specific therapeutic tasks, contributing to the system's overall effectiveness.

4.2.1 The Listener & Perception Agent

Role: Primary interface for capturing and interpreting user input.

Responsibilities:

Employs advanced Natural Language Understanding (NLU) to parse text and extract semantic meaning, leveraging LLMs like GPT-4 for robust language processing.

Conducts sentiment analysis to assess emotional tone (e.g., distress, progress), using models trained on mental health datasets.

Provides contextually rich, emotionally attuned input for downstream agents.

Significance: Its accuracy in understanding user input sets the foundation for effective therapeutic responses, ensuring the system captures emotional nuances critical to CBT.

4.2.2 The Cognitive Analyzer Agent

Role: Identifies Negative Automatic Thoughts (NATs) and cognitive distortions.

Responsibilities:

Uses a CBT knowledge base and machine learning models (e.g., fine-tuned BERT models) to detect patterns like "all-or-nothing thinking" or "catastrophizing" based on linguistic cues.

Classifies distortions with high precision, drawing on annotated mental health text datasets.

Outputs identified distortions to guide subsequent interventions.

Output: Feeds results to the Socratic Dialogue Agent for further exploration, ensuring targeted therapeutic conversations.

4.2.3 The Socratic Dialogue Agent

Role: Facilitates guided discovery through reflective questioning, a core CBT technique.

Responsibilities:

Generates open-ended questions to challenge distortions and promote self-insight, using LLMs for natural and empathetic dialogue.

Maintains conversational focus, adapting to user responses while avoiding redundancy or looping, inspired by systems like Socrates 2.0.

Incorporates internal supervision mechanisms to refine dialogue quality, ensuring therapeutic nuance and alliance.

Design Note: May leverage techniques like constrained generation to ensure responses align with CBT principles, enhancing dialogue effectiveness.

4.2.4 The Behavioral Strategist Agent

Role: Designs and tracks behavioral interventions to support the cognitive triangle.

Responsibilities:

Proposes homework, behavioral activation exercises, and goal-setting activities tailored to user needs.

Monitors completion and evaluates impact on mood and mastery, using feedback loops to refine suggestions.

Personalizes interventions based on data from the User Model & Personalization Agent.

Output: Translates cognitive insights into actionable behavior changes, fostering practical progress.

4.2.5 The User Model & Personalization Agent

Role: Maintains a dynamic user profile for tailored therapy.

Responsibilities:

Tracks progress, preferences, recurring themes, and intervention responses, storing data in a structured knowledge graph.

Updates the user model in real time to inform personalization across sessions.

Adapts therapeutic strategies to individual needs, enhancing engagement and efficacy.

Significance: Ensures the system remains relevant and effective by customizing interventions to the user’s unique profile.

4.2.6 The Session & Workflow Manager Agent

Role: Orchestrates the entire system to ensure a coherent therapeutic experience.

Responsibilities:

Sets session agendas collaboratively with the user, prioritizing key issues.

Manages timing and transitions between agents to maintain session flow.

Ensures a structured, evidence-based workflow, mirroring human CBT sessions.

Concludes sessions with homework prompts and feedback collection.

Analogy: Acts as the central intelligence, directing all agents toward therapeutic goals.

4.3 Communication and Coordination

The system employs a blackboard architecture to facilitate efficient inter-agent communication and coordination. In this setup, a shared memory space (the blackboard) allows agents to post outputs and read inputs asynchronously, minimizing dependencies and enhancing modularity. The Session & Workflow Manager Agent oversees the blackboard, activating agents based on session state and therapeutic objectives. Messages are standardized with metadata (e.g., agent ID, timestamps) to ensure clarity and interoperability. This architecture supports seamless collaboration, allowing agents to operate independently while contributing to a unified therapeutic process. For example, the Listener & Perception Agent posts processed user input to the blackboard, which the Session & Workflow Manager Agent uses to trigger the Cognitive Analyzer Agent.
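A blackboard of this kind can be sketched in a few lines. The sketch is synchronous and single-process for clarity, whereas the text describes asynchronous posting; the `Message` fields beyond agent ID and timestamp, the topic names, and the toy analyzer rule are assumptions of this example.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Message:
    agent_id: str
    topic: str
    payload: dict
    timestamp: float = field(default_factory=time.time)


class Blackboard:
    """Shared store that agents post to and read from."""

    def __init__(self):
        self._messages = []

    def post(self, message):
        self._messages.append(message)

    def latest(self, topic):
        for message in reversed(self._messages):
            if message.topic == topic:
                return message
        return None


def listener(board, text):
    board.post(Message("listener", "perception", {"text": text}))


def cognitive_analyzer(board):
    text = board.latest("perception").payload["text"].lower()
    labels = ["labeling"] if "i'm a" in text else []  # toy rule, illustration only
    board.post(Message("cognitive_analyzer", "distortions", {"labels": labels}))


def manager_step(board):
    """Activate the analyzer once perception exists and no analysis has been posted."""
    if board.latest("perception") and board.latest("distortions") is None:
        cognitive_analyzer(board)
        return "cognitive_analyzer"
    return None
```

Agents never call each other directly: the listener only posts, the analyzer only reads and posts, and the manager decides who runs next by inspecting the board. That separation is what lets an agent be replaced without touching the others.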

4.4 Knowledge Representation

The system leverages multiple forms of knowledge representation to support its operations:

CBT Knowledge Base: A structured ontology encodes CBT principles, including cognitive distortions, their linguistic markers, and corresponding therapeutic techniques. This enables agents like the Cognitive Analyzer to make informed decisions.

User Profile: Maintained by the User Model & Personalization Agent, this is represented as a knowledge graph capturing the user’s cognitive patterns, emotional states, and intervention history. The graph structure facilitates efficient querying and updating.

Machine Learning Models: Agents use pre-trained models (e.g., BERT variants for distortion classification) and LLMs for dialogue generation (e.g., unsloth_Gemma 3) fine-tuned on mental health datasets, with the aim of high accuracy and relevance.

These representations provide a robust foundation for the system’s decision-making and personalization capabilities.
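As a rough illustration of how the ontology and the user knowledge graph might fit together, the sketch below stores the ontology as a dictionary and the user profile as subject-relation-object triples. The entries, relation names, and the technique assigned to each distortion are placeholders chosen for this example, not a clinical mapping.

```python
from collections import defaultdict

# Hypothetical ontology fragment. Markers echo examples used earlier in the
# article; the technique assignments are placeholders.
CBT_ONTOLOGY = {
    "all_or_nothing_thinking": {
        "markers": ["always", "never"],
        "technique": "socratic_questioning",
    },
    "should_statements": {
        "markers": ["should", "ought", "must"],
        "technique": "cognitive_restructuring",
    },
}


class UserGraph:
    """Minimal triple store: (subject, relation) -> set of objects."""

    def __init__(self):
        self._edges = defaultdict(set)

    def add(self, subject, relation, obj):
        self._edges[(subject, relation)].add(obj)

    def query(self, subject, relation):
        return sorted(self._edges.get((subject, relation), set()))


def techniques_for_user(graph, user_id):
    """Look up techniques for every distortion recorded for the user."""
    distortions = graph.query(user_id, "exhibits")
    return sorted({CBT_ONTOLOGY[d]["technique"] for d in distortions if d in CBT_ONTOLOGY})


graph = UserGraph()
graph.add("user_1", "exhibits", "should_statements")
graph.add("user_1", "exhibits", "all_or_nothing_thinking")
graph.add("user_1", "completed", "thought_record")
```

`techniques_for_user(graph, "user_1")` joins the two representations: the graph says what has been observed for this user, and the ontology says what CBT recommends for it. A production system would likely use a graph database and a formal ontology language instead of in-memory dictionaries.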

4.5 Learning and Adaptation

To remain adaptive and improve over time, the system incorporates learning mechanisms at multiple levels:

Agent-Level Learning: Individual agents refine their models based on user feedback and new data. For example, the Cognitive Analyzer Agent improves distortion detection accuracy through supervised learning on annotated datasets.

System-Level Learning: The Session & Workflow Manager Agent employs reinforcement learning to optimize session flow and agent activation strategies, using metrics like user progress and satisfaction.

Personalization: The User Model & Personalization Agent uses unsupervised learning (e.g., clustering) to identify patterns in user behavior, tailoring interventions to individual needs.

These mechanisms ensure the system evolves, enhancing its effectiveness and relevance over time.
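One of the simplest forms the system-level learning could take is a multi-armed bandit that learns which agent activation tends to earn better user feedback. The epsilon-greedy sketch below is a deliberately reduced stand-in for full reinforcement learning; the action names, the reward scale, and the class name `EpsilonGreedy` are assumptions of this example.

```python
import random


class EpsilonGreedy:
    """Epsilon-greedy bandit with incremental mean reward estimates."""

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.counts = {a: 0 for a in self.actions}
        self.values = {a: 0.0 for a in self.actions}
        self._rng = random.Random(seed)  # seeded for reproducibility

    def choose(self):
        """Explore with probability epsilon, otherwise pick the best estimate."""
        if self._rng.random() < self.epsilon:
            return self._rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.values[a])

    def update(self, action, reward):
        """Fold a new reward into the running mean for the chosen action."""
        self.counts[action] += 1
        n = self.counts[action]
        self.values[action] += (reward - self.values[action]) / n


policy = EpsilonGreedy(["socratic_dialogue", "behavioral_strategy"], epsilon=0.0)
policy.update("behavioral_strategy", 1.0)  # e.g., user rated the step helpful
policy.update("socratic_dialogue", 0.2)
```

With exploration switched off (`epsilon=0.0`), `policy.choose()` returns `"behavioral_strategy"` after these two updates. In a therapeutic setting, exploration would need to be constrained to clinically acceptable actions, which this sketch does not address.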

4.6 Ethical and Safety Considerations

Given the sensitive nature of mental health care, the CBT system prioritizes ethical and safety considerations:

Data Privacy: All user data is encrypted and stored securely, adhering to standards like GDPR and HIPAA. Access controls ensure only authorized agents can interact with specific data.

Crisis Detection and Response: The Listener & Perception Agent is trained to detect signs of crisis (e.g., suicidal ideation) using pattern recognition, triggering predefined protocols to alert human professionals or provide emergency resources.

Bias Mitigation: Machine learning models are trained on diverse datasets and audited regularly to minimize biases, ensuring equitable treatment across user demographics.

Transparency: The system provides explanations for its actions (e.g., why a question was asked), enhancing user trust and understanding.

Human Oversight: Designed to complement human therapists, the system includes options for human review and intervention, particularly in complex cases.

These measures ensure responsible deployment, aligning with ethical guidelines for AI in mental health.
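The crisis-detection step can be pictured as a screening function that runs before any other agent sees the message. The sketch below uses plain phrase matching purely to show the control flow; the phrase list, action names, and `ScreeningResult` type are illustrative assumptions. Phrase matching misses paraphrase and context, so a deployed system would need validated models and human oversight.

```python
from dataclasses import dataclass, field

# Illustrative phrases only; not a clinically validated list.
CRISIS_PHRASES = ("kill myself", "end my life", "suicide", "hurt myself")


@dataclass
class ScreeningResult:
    crisis: bool
    matched: list = field(default_factory=list)
    action: str = "continue_session"


def screen_message(text):
    """Flag a message for escalation if it contains any crisis phrase."""
    lowered = text.lower()
    matched = [phrase for phrase in CRISIS_PHRASES if phrase in lowered]
    if matched:
        return ScreeningResult(True, matched, "escalate_to_human")
    return ScreeningResult(False)
```

Running the screen first and returning an explicit action keeps the escalation decision out of the dialogue agents, so a generated reply cannot override it.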

4.7 User Interface and Interaction

The system features a text-based chat interface designed to be intuitive and empathetic, hiding the multi-agent complexity from the user. Key features include:

Natural Language Processing: Advanced NLP, powered by LLMs, ensures contextually appropriate and emotionally attuned responses.

Asynchronous Support: The system sends reminders for homework and checks in on user progress between sessions, enhancing engagement.

Emergency Information: Clear disclaimers and links to crisis resources are integrated, ensuring users know when to seek human help.

Extensibility: The architecture is flexible to accommodate future multimodal inputs (e.g., voice, video), leveraging models like GPT-4o for enhanced interaction.

This design prioritizes user experience, making therapy accessible and supportive.

4.8 Integration with Existing Systems

For deployment in healthcare settings, the system supports integration with electronic health records (EHRs) and other medical systems. It adheres to standards like HL7 and FHIR for seamless data exchange, enabling coordination with human therapists and healthcare providers. This integration ensures the system can operate within existing mental health ecosystems, enhancing its practical utility.
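To make the data-exchange idea concrete, the sketch below builds a mood rating as a FHIR `Observation` resource in JSON form. Choosing `Observation` for this purpose, the free-text `code`, and the helper name `mood_observation` are assumptions of this example; a real integration would use coded terminology agreed with the receiving system and validate against its FHIR profile.

```python
import json
from datetime import datetime, timezone


def mood_observation(patient_id, rating, when):
    """Build a minimal FHIR Observation dict for a self-reported mood rating."""
    if not 1 <= rating <= 10:
        raise ValueError("rating must be between 1 and 10")
    return {
        "resourceType": "Observation",
        "status": "final",
        # Free-text code only; a coded concept is deliberately omitted here.
        "code": {"text": "Self-reported mood rating (1-10)"},
        "subject": {"reference": f"Patient/{patient_id}"},
        "effectiveDateTime": when.isoformat(),
        "valueInteger": rating,
    }


observation = mood_observation("example", 6, datetime(2025, 1, 1, tzinfo=timezone.utc))
payload = json.dumps(observation, indent=2)
```

The resulting `payload` is the JSON body that would be sent to an EHR's FHIR endpoint; transport, authentication, and consent handling are outside the scope of this sketch.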

4.9 Safety Mechanisms

To ensure the safety and appropriateness of responses, the system incorporates advanced safety mechanisms:

Output Filtering: Responses from the Socratic Dialogue Agent and others are passed through filters to detect potentially harmful content, using predefined criteria and AI-based checks.

Therapeutic Alignment: A dedicated model, trained on CBT literature and therapist feedback, evaluates responses for alignment with therapeutic best practices, inspired by systems like Socrates 2.0.

User Feedback Loop: Users can rate the helpfulness of responses, providing data to refine the system’s behavior via reinforcement learning.

Escalation Protocols: Critical situations trigger immediate escalation to human professionals, with clear pathways for intervention.

These mechanisms safeguard users and maintain the system’s therapeutic integrity.
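Output filtering can be sketched as a chain of small checks applied to each candidate reply, with a safe fallback when any check fails. The two checks, the word limit, and the fallback wording below are hypothetical examples of "predefined criteria"; a model-based alignment check would slot into the same chain.

```python
FALLBACK = "Could you tell me more about what led you to that thought?"


def no_directive_advice(reply):
    """Socratic replies should ask rather than instruct (assumed criterion)."""
    return "directive advice" if "you should" in reply.lower() else None


def not_too_long(reply, limit=60):
    """Reject overly verbose replies; the 60-word limit is an assumption."""
    return "too long" if len(reply.split()) > limit else None


def filter_reply(reply, checks):
    """Run every check; return the reply if all pass, else the fallback plus reasons."""
    reasons = [reason for reason in (check(reply) for check in checks) if reason]
    if reasons:
        return FALLBACK, reasons
    return reply, []


CHECKS = [no_directive_advice, not_too_long]
```

For example, `filter_reply("You should just stop worrying.", CHECKS)` returns the fallback together with `["directive advice"]`. Returning the reasons alongside the reply gives the system an audit trail and a signal for refining the generating agent.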

4.10 Hypothetical Interaction Scenario

User Input: The user initiates a session by expressing a negative thought, stating, “I failed my exam, I’m a complete failure.”

Listener & Perception Agent Processing: The Listener & Perception Agent receives this input, performs NLU to parse the statement, and conducts sentiment analysis, identifying a strong negative sentiment and self-deprecating tone. It posts this processed information to the blackboard.

Session & Workflow Manager Agent Activation (Cognitive Analysis): Recognizing a potentially maladaptive thought, the Session & Workflow Manager Agent activates the Cognitive Analyzer Agent, providing the processed statement.

Cognitive Analyzer Agent Identification: The Cognitive Analyzer Agent identifies “all-or-nothing thinking” and “labeling” in the statement, returning these classifications to the blackboard.

Session & Workflow Manager Agent Activation (Socratic Dialogue): The Session & Workflow Manager Agent activates the Socratic Dialogue Agent, providing the identified distortions.

Socratic Dialogue Agent Engagement: The Socratic Dialogue Agent asks reflective questions like, “What evidence do you have that this single exam defines you as a complete failure?” to foster self-reflection.

User Model & Personalization Agent Update: The User Model & Personalization Agent updates the user profile with the identified distortions and dialogue outcomes.

Behavioral Strategist Agent Activation (Conditional): If the user gains insight but needs action, the Behavioral Strategist Agent suggests a study schedule or stress-reduction techniques.

Conclusion

The Agentic AI-CBT framework delivers CBT through a multi-agent system, integrating advanced AI technologies with a modular and scalable design. By addressing communication, data flow, knowledge representation, learning, ethics, and user interaction, the framework provides a comprehensive blueprint for the implementation discussed in later parts of the article. It draws on insights from recent multi-agent systems such as Socrates 2.0 to support effective, safe, and personalized digital therapy, addressing the global mental health crisis with innovation and responsibility.

5. Conclusion for Part I and Bridge to Parts II and III

Summary of the Framework

This initial part of the article has laid the foundational theoretical and architectural groundwork for an autonomous multi-agent system designed to deliver Cognitive Behavioral Therapy in psychological counseling. The analysis commenced by underscoring the pressing global mental health crisis, characterized by a profound accessibility gap exacerbated by factors such as stigma, cost, and the enduring impact of events like the COVID-19 pandemic. Traditional mental healthcare models are demonstrably insufficient to meet this escalating demand, necessitating innovative, scalable solutions. While existing AI applications in mental health offer promising avenues, their limitations—particularly concerning empathy, nuanced understanding, and ethical considerations—highlight the need for a more sophisticated approach.

The article then introduced the paradigm of Agentic AI and Multi-Agent Systems (MAS) as a compelling solution. The inherent advantages of MAS, including modularity, specialization, scalability, and robustness, position it as an ideal framework for modeling the complex, multifaceted nature of the therapeutic process. By deconstructing core CBT components—the cognitive model, Socratic questioning, and behavioral activation—into computationally tractable elements, a clear pathway for their algorithmic implementation was established. The cognitive model, with its interconnected thoughts, feelings, and behaviors, can be effectively represented using knowledge graphs and ontological structures, forming the core "mind" of the AI. Cognitive restructuring, with its structured steps for identifying, challenging, and reframing maladaptive thoughts, is highly amenable to AI automation through NLP and machine learning techniques, supported by annotated datasets. Similarly, the structured, goal-oriented nature of Socratic questioning and the algorithmic steps of behavioral activation lend themselves to specialized agent functionalities.

The proposed framework outlines a high-level architecture centered around a "Session & Workflow Manager Agent" that orchestrates the collaboration of specialized agents: the Listener & Perception Agent, Cognitive Analyzer Agent, Socratic Dialogue Agent, Behavioral Strategist Agent, and User Model & Personalization Agent. This architecture ensures distributed expertise, improved coherence in therapeutic flow, and greater adaptability to individual user needs. The core argument reiterated is that a multi-agent architecture provides a robust, theoretically grounded, and computationally feasible model for autonomous CBT, offering a scalable and personalized approach to digital mental health.

Challenges and Future Directions

While the proposed Agentic AI-CBT framework offers significant promise, its development and deployment are not without substantial challenges that warrant careful consideration and continued research.

Ethical Considerations: The ethical landscape of autonomous AI in mental health is complex and paramount. Concerns revolve around data privacy and security, given the highly sensitive nature of psychological data.6 Robust encryption, secure storage, and transparent privacy policies are non-negotiable requirements, along with explicit and purpose-bound informed consent from users.10 Bias and fairness are also critical; AI algorithms can inadvertently perpetuate biases present in their training data, leading to inequitable or stigmatizing outcomes, particularly for marginalized communities.5 Mitigation strategies, such as diverse training datasets, fairness audits, and bias-aware ML algorithms, must be integrated from the outset.10 Furthermore, issues of accountability and responsibility for AI-generated responses remain complex, especially given the lack of interpretability in deep neural networks.10 Clear responsibility hierarchies, audit trails, and human-in-the-loop (HITL) or human-on-the-loop (HOTL) safeguards are essential to ensure human oversight and accountability.10 The system must always acknowledge its non-human nature and be designed to complement, not replace, human therapists, particularly for severe conditions or crisis situations.8

Complexity of Capturing Therapeutic Nuance: Replicating the full spectrum of human empathy, genuine connection, and the subtle nuances of therapeutic rapport remains a formidable challenge for AI.8 AI chatbots often struggle with understanding complex emotional cues, idioms, and sarcasm, which are integral to effective human communication in therapy.9 While a multi-agent approach can distribute expertise, achieving a holistic, empathetic interaction that fosters trust and therapeutic alliance will require significant advancements in AI's emotional intelligence and contextual understanding.

Need for Rigorous Testing and Validation: Before widespread deployment, the system will require rigorous empirical validation through extensive clinical trials.6 This includes assessing efficacy, safety, user adherence, and long-term outcomes across diverse populations and cultural contexts.6 Testing must go beyond symptom reduction to evaluate the system's ability to foster genuine insight, build coping skills, and prevent relapse. The development of comprehensive regulatory frameworks and ethical guidelines for AI in psychology is urgently needed to ensure safe and responsible deployment.6

Preview of Parts II and III

The development of an autonomous agentic AI system for Cognitive Behavioral Therapy (CBT), such as the Agentic AI CBT, hinges on the availability of high-quality, diverse, and ethically sourced data. Recent advancements in AI for mental health underscore the need to leverage multiple data sources while addressing ethical and practical challenges in data collection and training. Part II of this article series details the types of data required, the ethical considerations for their collection and use, and strategies for handling data scarcity and ensuring quality, building on the foundational elements introduced in this part of the series (e.g., Sections 3.2.2, 4.4, 4.5, and 4.6).

Part III of this article will build upon the conceptual framework established herein, delving into the technical implementation details of the Autonomous Multi-Agent System for CBT. It will explore the specific choices and integration of AI models for each specialized agent, including the underlying large language models (LLMs) and other machine learning algorithms employed for tasks such as NLU, sentiment analysis, cognitive distortion classification, and dialogue generation. Furthermore, Part III will detail the design of the Agent Communication Language (ACL), specifying the performatives and content languages that will facilitate seamless and robust inter-agent communication. The architecture for the User Model, including data structures for storing dynamic user profiles and mechanisms for personalization and adaptive learning, will also be elaborated. Finally, Part III will address strategies for rigorous testing, validation, and ethical deployment of the system, outlining approaches to mitigate bias, ensure privacy, and establish accountability in a clinical context.
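As a rough illustration of what a performative-based inter-agent message could look like, the sketch below pairs a small set of performatives with a JSON content language. It is an assumption made for illustration, not the ACL design that Part III will specify: the performative names are borrowed from common FIPA-style usage, and the ACLMessage fields and the example content keys are hypothetical.

```python
import json
from dataclasses import dataclass
from enum import Enum


class Performative(Enum):
    """A small, illustrative subset of FIPA-style performatives."""
    INFORM = "inform"
    REQUEST = "request"
    PROPOSE = "propose"


@dataclass(frozen=True)
class ACLMessage:
    """Hypothetical message envelope exchanged between agents."""
    performative: Performative
    sender: str
    receiver: str
    content: dict
    language: str = "json"   # content language assumed for this sketch

    def to_json(self) -> str:
        # Serialize the envelope so it can be passed between agents.
        return json.dumps({
            "performative": self.performative.value,
            "sender": self.sender,
            "receiver": self.receiver,
            "language": self.language,
            "content": self.content,
        }, sort_keys=True)


# Example: the analyzer informs the dialogue agent of a detected distortion.
msg = ACLMessage(
    performative=Performative.INFORM,
    sender="CognitiveAnalyzerAgent",
    receiver="SocraticDialogueAgent",
    content={"distortion": "catastrophizing", "confidence": 0.82},
)
decoded = json.loads(msg.to_json())
```

Keeping the performative separate from the content lets a receiving agent decide how to react (for example, treating an inform differently from a request) before it parses the payload.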

Important References

World Health Organization: Addressing Global Mental ... - UNA-NCA, accessed June 30, 2025, https://www.unanca.org/images/content/global-classrooms/FAQ-for-educators/WHO_BG_Winter_Training_2025.docx.pdf

Disability - World Health Organization (WHO), accessed June 30, 2025, https://www.who.int/news-room/fact-sheets/detail/disability-and-health

Impact of COVID-19 pandemic on mental health in the general population: A systematic review - PubMed Central, accessed June 30, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC7413844/

The Implications of COVID-19 for Mental Health and Substance Use ..., accessed June 30, 2025, https://www.kff.org/mental-health/issue-brief/the-implications-of-covid-19-for-mental-health-and-substance-use/

New study warns of risks in AI mental health tools | Stanford Report, accessed June 30, 2025, https://news.stanford.edu/stories/2025/06/ai-mental-health-care-tools-dangers-risks

(PDF) Challenges of Using AI in Contemporary Psychological Interventions - ResearchGate, accessed June 30, 2025, https://www.researchgate.net/publication/392969567_Challenges_of_Using_AI_in_Contemporary_Psychological_Interventions

Application of artificial intelligence tools in diagnosis and treatment of ..., accessed June 30, 2025, https://czasopisma.umlub.pl/cpp/article/view/977

An Exploratory Investigation of Chatbot Applications in Anxiety ..., accessed June 30, 2025, https://www.mdpi.com/2078-2489/16/1/11

AI as the Therapist: Student Insights on the Challenges of Using Generative AI for School Mental Health Frameworks - MDPI, accessed June 30, 2025, https://www.mdpi.com/2076-328X/15/3/287

Ethical Considerations in Deploying Autonomous AI Agents - Auxiliobits, accessed June 30, 2025, https://www.auxiliobits.com/blog/ethical-considerations-when-deploying-autonomous-agents/

Multi-Agent Systems in AI: Challenges, Safety Measures, and Ethical Considerations, accessed June 30, 2025, https://skphd.medium.com/multi-agent-systems-in-ai-challenges-safety-measures-and-ethical-considerations-7a7636b971bd

What is Agentic AI? | Aisera, accessed June 30, 2025, https://aisera.com/blog/agentic-ai/

Agentic AI Vs AI Agents: 5 Differences and Why They Matter ..., accessed June 30, 2025, https://www.moveworks.com/us/en/resources/blog/agentic-ai-vs-ai-agents-definitions-and-differences

How we built our multi-agent research system - Anthropic, accessed June 30, 2025, https://www.anthropic.com/engineering/built-multi-agent-research-system

CBT Triangle: A Foundational Tool for Cognitive Behavioral Therapy - Blueprint, accessed June 30, 2025, https://www.blueprint.ai/blog/cbt-triangle-a-foundational-tool-for-cognitive-behavioral-therapy

The Positive CBT Triangle Explained (+11 Worksheets), accessed June 30, 2025, https://positivepsychology.com/cbt-triangle/

Cognitive Behavioural Therapy (CBT) Skills Workbook - Hertfordshire Partnership University NHS Foundation Trust, accessed June 30, 2025, https://www.hpft.nhs.uk/media/1655/wellbeing-team-cbt-workshop-booklet-2016.pdf

Cognitive behavioral therapy - Wikipedia, accessed June 30, 2025, https://en.wikipedia.org/wiki/Cognitive_behavioral_therapy

Unlocking Graph Databases in Cognitive Computing - Number Analytics, accessed June 30, 2025, https://www.numberanalytics.com/blog/ultimate-guide-graph-databases-cognitive-computing

AI-enhanced cognitive behavioral therapy with novel ontological and pattern-based thought segmentation - ResearchGate, accessed June 30, 2025, https://www.researchgate.net/publication/388340559_AI-enhanced_cognitive_behavioral_therapy_with_novel_ontological_and_pattern-based_thought_segmentation

Cognitive Restructuring Techniques: Fast, Positive Results, accessed June 30, 2025, https://dralanjacobson.com/cognitive-restructuring/

Mastering Cognitive Behavioral Therapy - Number Analytics, accessed June 30, 2025, https://www.numberanalytics.com/blog/mastering-cbt-for-negative-automatic-thoughts

Reframing Negative Thoughts with NLP - The MindPower, accessed June 30, 2025, https://themindpower.in/blog/reframing-negative-thoughts-with-nlp/

Learn the Art of Positive Self-Talk with NLP Techniques - NLP Training World, accessed June 30, 2025, https://nlptrainingworld.com/self-talk-with-nlp/

Socratic Questioning in Cognitive Behavioral Therapy (CBT) - Royal Life Centers, accessed June 30, 2025, https://chapter5recovery.com/what-is-socratic-questioning-during-cognitive-behavioral-therapy-cbt/

Cognitive Distortions: 15 Examples & Worksheets (PDF) - Positive Psychology, accessed June 30, 2025, https://positivepsychology.com/cognitive-distortions/

arxiv.org, accessed June 30, 2025, https://arxiv.org/pdf/1909.07502#:~:text=We%20define%20cognitive%20distortion%20detection,in%20text%20that%20is%20already

Automatic Detection and Classification of Cognitive ... - arXiv, accessed June 30, 2025, https://arxiv.org/pdf/1909.07502

Pattern Recognition in AI: A Comprehensive Guide - SaM Solutions, accessed June 30, 2025, https://sam-solutions.com/blog/pattern-recognition-in-ai/

Socratic wisdom in the age of AI: a comparative study of ChatGPT and human tutors in enhancing critical thinking skills - Frontiers, accessed June 30, 2025, https://www.frontiersin.org/journals/education/articles/10.3389/feduc.2025.1528603/full

A Novel Cognitive Behavioral Therapy–Based Generative AI Tool (Socrates 2.0) to Facilitate Socratic Dialogue - JMIR Research Protocols, accessed June 30, 2025, https://www.researchprotocols.org/2024/1/e58195/PDF

AI Powered Socratic Questioning: Four Prompts to Accelerate Change and Surface Hidden Resistance - Emergent Journal, accessed June 30, 2025, https://blog.emergentconsultants.com/ai-powered-socratic-questioning-four-prompts-to-accelerate-change-and-surface-hidden-resistance/

Socratic Intelligence Challenge - AI Prompt - DocsBot AI, accessed June 30, 2025, https://docsbot.ai/prompts/education/socratic-intelligence-challenge

Behavioral activation - Wikipedia, accessed June 30, 2025, https://en.wikipedia.org/wiki/Behavioral_activation

Behavioral Activation: A Practical Tool for Treating Depression and Behavioral Avoidance, accessed June 30, 2025, https://www.blueprint.ai/blog/behavioral-activation-a-practical-tool-for-treating-depression-and-behavioral-avoidance

Opposite Action, Behavioral Activation, and Exposure - Cognitive Behavioral Therapy Los Angeles, accessed June 30, 2025, https://cogbtherapy.com/opposite-action-behavioral-activation-and-exposure

Behavioral Activation Worksheet: A Practical Tool for Rebuilding Motivation and Mood in Therapy - Blueprint, accessed June 30, 2025, https://www.blueprint.ai/blog/behavioral-activation-worksheet-a-practical-tool-for-rebuilding-motivation-and-mood-in-therapy

CBT Session Structure Outlines: A Therapist's Guide | Shortform Books, accessed June 30, 2025, https://www.shortform.com/blog/cbt-session-structure/

Structure of a CBT Session - YouTube, accessed June 30, 2025,

Algorithm‐based modular psychotherapy vs. cognitive‐behavioral therapy for patients with depression, psychiatric comorbidities and early trauma: a proof‐of‐concept randomized controlled trial - PubMed Central, accessed June 30, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC11083959/

Digital and AI-Enhanced Cognitive Behavioral Therapy for Insomnia: Neurocognitive Mechanisms and Clinical Outcomes - MDPI, accessed June 30, 2025, https://www.mdpi.com/2077-0383/14/7/2265

Agent Communications Language - Wikipedia, accessed June 30, 2025, https://en.wikipedia.org/wiki/Agent_Communications_Language

Part 2: Agent Communication - UPC, accessed June 30, 2025, https://www.cs.upc.edu/~jvazquez/teaching/sma-upc/slides/sma02b-Communication.pdf

What are multi-agent systems? | SAP, accessed June 30, 2025, https://www.sap.com/resources/what-are-multi-agent-systems

Belief–desire–intention software model - Wikipedia, accessed June 30, 2025, https://en.wikipedia.org/wiki/Belief%E2%80%93desire%E2%80%93intention_software_model

L. Gabora and J. Bach, “A path to generative artificial selves,” in EPIA Conference on Artificial Intelligence, pp. 15–29, Springer, 2023.

G. Pezzulo, T. Parr, P. Cisek, A. Clark, and K. Friston, “Generating meaning: active inference and the scope and limits of passive ai,” Trends in Cognitive Sciences, vol. 28, no. 2, pp. 97–112, 2024.

Naveen Krishnan, 2025, “AI Agents: Evolution, Architecture, and Real-World Applications”

Meng Jiang et al., 2024, “A Generic Review of Integrating Artificial Intelligence in Cognitive Behavioral Therapy”

Yuheng Cheng et al., 2024, “Exploring Large Language Model Based Intelligent Agents: Definitions, Methods, and Prospects”

Taicheng Guo et al., 2024, “Large Language Model Based Multi-Agents: A Survey of Progress and Challenges”

Khanh-Tung Tran et al., 2025, “Multi-Agent Collaboration Mechanisms: A Survey of LLMs”

Shunyu Yao et al., 2023, “ReAct: Synergizing Reasoning and Acting in Language Models”

Zhiheng Xi et al., 2023, “The Rise and Potential of Large Language Model Based Agents: A Survey”