Model Drift: Identifying and Monitoring for Model Drift in Machine Learning Engineering and Production

“Drift” is a term used in machine learning to describe how the performance of a machine learning model in production slowly gets worse over time. This can happen for a number of reasons, such as changes in the distribution of the input data over time or the relationship between the input (x) and the desired target (y) changing.

Drift can be a big problem when we use machine learning in the real world, where data is often dynamic and always changing. This series of articles will dive deep into why model drift happens, the different types of drift, and algorithms to detect them, and will finally wrap up with an open-source implementation of drift detection in Python.

Machine learning models are trained with historical data, but once they are used in the real world, they may become outdated and lose their accuracy over time due to a phenomenon called drift.

Drift is the change over time in the statistical properties of production data relative to the data that was used to train a machine learning model. This can cause the model to become less accurate or perform differently than it was designed to.

Types of Drifts

Model drift can take six primary forms. Some are obvious to detect, while others require a great deal of research and analysis to discover. Figure 1 gives a brief overview of these mechanisms of model degradation.

Feature Drift

Let’s imagine for a moment that our ice cream propensity-to-buy model uses weather forecast data in multiple regions. Let’s also pretend that we have no monitoring set up on our model and are using a passive retraining of the model each month.

When we were originally building the model, we did a thorough analysis of our features. We determined correlation values (Pearson’s and chi-squared) and found an astonishingly strong relationship between temperature and ice cream sales. For the first several months, everything is going well, with emails going out at defined intervals based on a propensity-to-open score of greater than 60%. All of a sudden, midway through June, the attribution models start to fall off a cliff.

The open and utilization rates are abysmally low. From a revenue lift of 20%, the test group is now showing a 300% loss. We continue to operate like this, with the marketing team trying different approaches to its campaigns. Even the product development team starts trying new flavors under the mistaken impression that customers are getting tired of the flavors that are on sale.

It’s not until a few months go by, when the DS team is informed that the project will likely get cancelled, that an in-depth investigation is undertaken. Upon investigation of the model’s predictions for the week starting in mid-June, we find a dramatic step-function shift in the probabilities for propensity-to-use coupons. When we look into the features, we find something a bit concerning, shown in Figure 2.

Even though this is a comical example of feature drift, it’s similar to many that I’ve seen in my career. I’ve rarely come across a DS who hasn’t had a data feed change unexpectedly without being notified, and many of those that I’ve experienced or heard about are as ridiculous as this example.

Many times, shifts like these will be of such a magnitude that prediction results become unusable, and it’s known within a short period of time that something substantial has changed. In some rare cases, such as the one shown in Figure 2, a shift can be nuanced and difficult to detect for a longer period of time if automated monitoring is not in place.

For this use case, the prediction outputs would be making recommendations for customers enduring a new modern ice age event. The probabilities coming out of the model would likely be very low for most users. Since the post-prediction trigger for sending out recommendations from the model is set at 60% propensity, the much lower probabilities would result in the vast majority of customers in the evaluation test no longer receiving emails. With feature monitoring in place that is measuring the mean and standard deviation, simple heuristic control logic would have caught this.
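As a minimal sketch of that kind of heuristic control logic, the snippet below compares the mean and standard deviation of a recent window of a feature against its training-time values. The function name, the thresholds, and the simulated temperature feed (a Fahrenheit feed that silently starts reporting Celsius) are all assumptions made for illustration; they are not taken from the scenario's actual data.

```python
import numpy as np


def feature_shift_alert(train_values, recent_values,
                        z_threshold=3.0, std_ratio_bounds=(0.5, 2.0)):
    """Flag a feature whose recent mean or spread departs from training.

    z_threshold and std_ratio_bounds are illustrative defaults, not tuned values.
    """
    train = np.asarray(train_values, dtype=float)
    recent = np.asarray(recent_values, dtype=float)

    train_mean, train_std = train.mean(), train.std(ddof=1)
    recent_mean, recent_std = recent.mean(), recent.std(ddof=1)

    # Standard error of the recent window's mean, using the training spread.
    std_err = train_std / np.sqrt(len(recent))
    z_score = (recent_mean - train_mean) / std_err
    std_ratio = recent_std / train_std

    mean_shifted = abs(z_score) > z_threshold
    low, high = std_ratio_bounds
    spread_shifted = not (low <= std_ratio <= high)
    return {
        "z_score": float(z_score),
        "std_ratio": float(std_ratio),
        "alert": bool(mean_shifted or spread_shifted),
    }


# Simulated data (assumption): training temperatures in Fahrenheit,
# recent values from a feed that now reports Celsius.
rng = np.random.default_rng(42)
train_temps_f = rng.normal(loc=75.0, scale=8.0, size=5000)
recent_temps_c = (rng.normal(loc=75.0, scale=8.0, size=500) - 32.0) * 5.0 / 9.0

result = feature_shift_alert(train_temps_f, recent_temps_c)
print(result)
```

A check like this is cheap enough to run on every scoring batch, so a step change in a feed would surface within a day rather than after months of confused campaign tuning.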

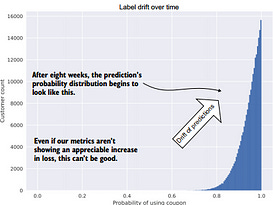

Label Drift

Label drift is a pretty insidious issue to track down. Typically caused by a shift in the distribution of several critical (high-importance) features, a drift in the label can work at cross-purposes to the desires of the business.

Let’s imagine that some aspect of our model for ice cream propensity starts to be affected by a latent force that we don’t fully understand due to a lack of data collection. We can see in the correlation what seems to be driving it, as it is universally reducing the variance of one of our feature values. However, we can’t conclusively tie one of our collected data features to the effects that we’re seeing. The main effect that we see is shown in Figure 3.

With this distribution shift, we could be looking at dramatic impacts on the business. From an ML perspective, the model’s accuracy (loss) could, theoretically, be better in the bottom scenario of Figure 3 than it was during initial training. This can make discovering events like this incredibly challenging; from a model training perspective, it could appear to be much better and ideal. However, from a business perspective, a drift event like this could prove disastrous.

What would happen if the marketing team had a threshold for sending the customized email coupons out only if the probability for using one was above 90%? Restrictions like this are usually in place because of costs (bulk sends are cheap, while customized solutions are far more expensive for services). If the marketing team based its threshold for sending on this level, having analyzed the results of the model’s predictions during the first few weeks of running, it would have selected an optimal cost-to-benefit ratio for these customized sends. With the label drift occurring over time in the second chart, this would mean that basically all of the test group customers would be entered into this program. This massive increase in cost could quickly make the project less palatable for the marketing department. If it were egregious enough, the team might just abandon its utilization of the project’s output entirely.

Paying close attention to the distribution of a model’s output over time can bring visibility to potential problems and ensure a certain degree of consistency to the output. When the results shift (and they will, let me assure you), be it in a seemingly positive or negative way, there could be follow-on effects from the results that the internal consumers of the model might not be prepared for.

It’s always best to monitor this. The impact to the greater scope of the business, depending on the sort of problem that you’re solving, could be severe if you’re not continually monitoring the state of the predictions coming out of the solution. An implementation of label drift monitoring should focus on the following:

For classification problems:

Define a time window for aggregation of prediction class values and store the counts of each.

Track the ratio of label predictions over time and establish acceptable deviation levels for the ratio values.

Perform a comparison of equivalency, utilizing an algorithm such as Fisher’s exact test with a very low alpha value (< 0.01), between recent values and the validation (test) metrics calculated during model generation.

(Optionally) Determine the probability mass function (pmf) of recent data and compare to the pmf of the validation predictions generated by the model during training. Comparison of pmf discrete distributions can be done with an algorithm such as Fisher’s noncentral hypergeometric test.
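The equivalency check for a binary classifier can be sketched with SciPy’s `fisher_exact`, which works on a 2x2 table of class counts. The function name and the example counts below are hypothetical, and the sketch covers only the two-class case; a multi-class problem would need a different comparison.

```python
from scipy.stats import fisher_exact


def class_ratio_drifted(validation_counts, recent_counts, alpha=0.01):
    """Compare predicted class counts from validation against a recent window.

    Each argument is a (n_positive, n_negative) pair of counts.
    Returns (drifted, p_value).
    """
    table = [list(validation_counts), list(recent_counts)]
    _, p_value = fisher_exact(table, alternative="two-sided")
    return bool(p_value < alpha), float(p_value)


# Hypothetical counts: 30% positive predictions at validation time,
# 52% positive predictions in the most recent window.
drifted, p_value = class_ratio_drifted((300, 700), (520, 480))
print(drifted, p_value)
```

With large windows, even tiny ratio changes become statistically significant, so it can help to pair the test with the acceptable deviation levels mentioned above rather than alerting on the p-value alone.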

For regression problems:

Analyze distribution of recent predictions (number of days back, number of hours back, depending on the volume and volatility of predictions) by capturing mean, median, stddev, and interquartile range (IQR) of the windowed data.

Set thresholds for monitoring the values of interest from the measured aggregated statistics. When a deviation occurs, alert the team to investigate.

(Optionally) Determine the closest distribution fit to the continuous predictions and compare the similarity of this probability density function (pdf) through the use of an algorithm such as the Kolmogorov-Smirnov test.
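A rough sketch of the regression checks follows. Note one simplification: instead of fitting a named distribution first, it applies the two-sample Kolmogorov-Smirnov test (`scipy.stats.ks_2samp`) directly to the validation and recent predictions. The function names and the simulated predictions are assumptions for illustration.

```python
import numpy as np
from scipy.stats import ks_2samp


def summarize(values):
    """Mean, median, stddev, and IQR for a window of predictions."""
    values = np.asarray(values, dtype=float)
    q1, median, q3 = np.percentile(values, [25, 50, 75])
    return {
        "mean": float(values.mean()),
        "median": float(median),
        "stddev": float(values.std(ddof=1)),
        "iqr": float(q3 - q1),
    }


def regression_output_drifted(validation_preds, recent_preds, alpha=0.01):
    """Two-sample KS test between validation and recent predictions.

    Returns (drifted, ks_statistic, p_value).
    """
    statistic, p_value = ks_2samp(validation_preds, recent_preds)
    return bool(p_value < alpha), float(statistic), float(p_value)


# Simulated predictions (assumption): the recent window has shifted upward.
rng = np.random.default_rng(7)
validation_preds = rng.normal(loc=100.0, scale=15.0, size=2000)
recent_preds = rng.normal(loc=130.0, scale=15.0, size=500)

print(summarize(validation_preds))
print(summarize(recent_preds))
print(regression_output_drifted(validation_preds, recent_preds))
```

The summary statistics are what you would store per window and threshold on; the KS test is the optional, heavier comparison for when the aggregates alone look ambiguous.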

Concept Drift

Concept drift is a challenging issue that can affect models. In simplest terms, it is an introduction of a large latent (not collected) variable that has a strong influence on a model’s predictions. These effects typically manifest themselves in a broad sense, changing most, if not all, of the features used for inference by a trained model. Continuing with our ice cream example, let’s look at Figure 4.

These values that we measure and use for correlation-based training (weather data, our own product data, and event data) have been used to build strong correlations to propensities to buy ice cream during the week for individual customers. The latent variables that are beyond our ability to collect have a stronger influence over a person’s decision to buy than the data that we collect.

When unknown influences positively or negatively influence a model’s output, we may get a dramatic shift in either our predictions or in the attribution measurements for the model, which is the case here. Tracking down the root cause can be either quite obvious (a global pandemic) or insidious and complex (social media effects on brand image). We can go about monitoring this type of drift for our scenario as follows:

Implement metric logging for:

Primary model error (loss) metric(s)

Model attribution criteria (the business metric that the project is working to improve)

Collect and generate aggregated statistics (over an applicable time window) for predictions:

Counts (number of predictions, predictions per grouped cohort, etc.)

Mean, stddev, and IQR for regressors

Counts (number of predictions, number of labels predicted) and bucketed probability thresholds for classifiers

Evaluate trends of aggregated statistics on predictions and attribution measurement over time. Unexplained drifts can be grounds for model retraining or a return to feature-engineering evaluation (additional features may be required to capture the new latent factor effects).
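The aggregation step might look something like the following pandas sketch. It assumes a hypothetical prediction log with a `DatetimeIndex` and a `probability` column, groups it into weekly windows, and tracks the share of predictions at or above a send threshold as a stand-in for bucketed probability thresholds. The column name, window size, and threshold are illustrative choices.

```python
import numpy as np
import pandas as pd


def windowed_prediction_stats(predictions, freq="W", send_threshold=0.6):
    """Aggregate logged prediction probabilities per time window.

    predictions: DataFrame with a DatetimeIndex and a 'probability' column
    (a hypothetical logging schema).
    """
    probs = predictions["probability"]
    window = probs.index.to_period(freq)
    grouped = probs.groupby(window)

    stats = grouped.agg(["count", "mean", "std"])
    stats["iqr"] = grouped.quantile(0.75) - grouped.quantile(0.25)
    # Fraction of predictions that would trigger a send in each window.
    stats["share_above_threshold"] = (probs >= send_threshold).groupby(window).mean()
    return stats


# Simulated log (assumption): 8 weeks, 50 predictions per day,
# with probabilities collapsing after the fourth week.
rng = np.random.default_rng(0)
days = pd.date_range("2024-05-06", periods=56, freq="D")
timestamps = days.repeat(50)
probabilities = np.concatenate([
    rng.beta(5, 2, size=28 * 50),   # healthy period
    rng.beta(2, 5, size=28 * 50),   # drifted period
])
log = pd.DataFrame({"probability": probabilities}, index=timestamps)

print(windowed_prediction_stats(log))
```

Writing this table out on a schedule gives you the trend data to evaluate; the same frame can be joined against attribution measurements for the matching windows.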

Regardless of the causes, it is important to monitor both potential symptoms of this issue: the model metrics associated with training for passive retraining, and the model attribution data for active retraining. Monitoring these shifts in model efficacy can help with early intervention, explainable analytics reports, and the ability to resolve the issue in a way that will not cause a disruption to the project as a whole.

The answer may not be readily apparent as with other types of drift (namely, feature and prediction drift). The critical aspect of production monitoring for this type of unexplained drift is that it is captured in the first place. Being blind to this potential impact to a model’s performance can have staggering effects on a business if left unchecked, depending on the use case. Creating these monitoring statistics through a simple ETL is always time well spent.

Prediction Drift

Prediction drift is highly related to label drift but has a nuanced difference that makes recovery from this type of drift follow an alternate set of actions. Like label drift, it affects the predictions greatly, but instead of being related to an outside influence, it’s directly related to a feature that is part of the model (although sometimes in a confounding manner).

Let’s imagine that our wildly successful ice cream company had, at the time of training our model solution, a rather paltry showing in the Pacific Northwest region of the United States. With a lack of training data, the model wasn’t well suited to adapt to the extreme minority of feature data associated with this region. Adding to this lack of data issue, we were unaware of whether potential future customers in this region would like our product, because of the same dearth of information for exploratory data analysis (EDA).

After the first few months of running the new campaign, raising awareness through word of mouth, it turns out that not only do people (and dogs) in the Pacific Northwest thoroughly enjoy our ice cream, but their behavior patterns turn out to match quite well with some of our most highly active customers. As a result, our model increases the frequency and rate at which coupons are issued to customers in this part of the country. Because of this increased demand, the model begins to issue so many coupons to customers in this region that we create an entirely new problem: a stock issue.

Figure 5 shows the effect on the business that our model has inadvertently helped to create. While this isn’t a bad problem per se (it certainly drives up revenue!), an unexpected driver to the foundation of the business’s operation can introduce problems that will need to be solved.

The situation shown in Figure 5 is a positive one indeed. However, the model’s impact in this case will not show up in modeling metrics. In reality, this would likely show as a fairly equivalent loss score, even if we were to retrain the model on this new data.

The attribution measurement analysis is the only way to detect this and account for the underlying shifts in the customer base moving forward.

Prediction drift, in general terms, is handled through the process of feature monitoring. This set of tooling involves many of the following concepts:

Distribution monitoring of priors for each feature compared to recent values, lagged by an appropriate time factor:

Calculate mean, median, standard deviation, IQR for the feature as it was at training time.

Compute recent feature statistical metrics that are being used for inference.

Calculate the distance or percentage error between these values.

If the delta between these metrics is above a determined level, alert the team.

Distribution equivalency measurement:

Convert continuous features to a probability density function (pdf) for the features as they were during training.

Convert nominal (categorical) features to a probability mass function (pmf) for the features as they were during training.

Compute the similarity between these and the most recent (unseen-to-the-model through training) inference data utilizing algorithms such as the Wasserstein metric or Hellinger distance.

Specifying statistical process control (SPC) rules for basic statistical metrics for each feature:

Sigma-based threshold levels whereby a smoothed value of each continuous feature over time is measured (typically, through a moving average or window aggregation) and alerted on when selected rules are violated. Western Electric rules are generally used for this.

SPC rules based on scaled percentage membership of categorical or nominal values within a feature (aggregated as a function of time).
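For the distribution equivalency measurement described above, a minimal sketch is shown here: `scipy.stats.wasserstein_distance` on raw samples of a continuous feature, and a small hand-written Hellinger distance on the pmf of a categorical feature. The helper names, the feature values, and the region categories are hypothetical, and what counts as "too far" is a threshold you would need to calibrate per feature.

```python
import numpy as np
from scipy.stats import wasserstein_distance


def categorical_pmf(values, categories):
    """Empirical pmf of `values` over a fixed, ordered list of categories."""
    values = np.asarray(values)
    counts = np.array([np.sum(values == c) for c in categories], dtype=float)
    return counts / counts.sum()


def hellinger_distance(p, q):
    """Hellinger distance between two discrete distributions (0 = identical, 1 = disjoint)."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    p = p / p.sum()
    q = q / q.sum()
    return float(np.sqrt(0.5 * np.sum((np.sqrt(p) - np.sqrt(q)) ** 2)))


# Continuous feature (simulated): training vs. recent inference values.
rng = np.random.default_rng(1)
train_temperature = rng.normal(loc=75.0, scale=8.0, size=5000)
recent_temperature = rng.normal(loc=68.0, scale=8.0, size=800)
print("Wasserstein:", wasserstein_distance(train_temperature, recent_temperature))

# Categorical feature (hypothetical regions): training vs. recent mix.
regions = ["northeast", "southeast", "midwest", "pacific_northwest"]
train_regions = ["northeast"] * 400 + ["southeast"] * 350 + ["midwest"] * 240 + ["pacific_northwest"] * 10
recent_regions = ["northeast"] * 300 + ["southeast"] * 280 + ["midwest"] * 200 + ["pacific_northwest"] * 220
print("Hellinger:", hellinger_distance(
    categorical_pmf(train_regions, regions),
    categorical_pmf(recent_regions, regions),
))
```

The Wasserstein value is in the feature’s own units (degrees, here), which makes thresholds easier to explain to non-ML stakeholders; the Hellinger value is bounded between 0 and 1, which makes it easier to compare across features.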

Whichever methodology you choose to utilize (or if you’d like to choose to do all of them), the most critical aspect of collecting information about the state of the features during training is that it allows for monitoring and having advance notice of feature degradation.
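To make the SPC option a little more concrete, here is a sketch that checks a series of windowed feature means against two of the Western Electric rules: a single point more than three sigma from the center line, and eight consecutive points on the same side of the center line. It deliberately leaves out the two-of-three and four-of-five zone rules. The function name and the sample values are assumptions, and `sigma` is expected to be the standard deviation of the windowed statistic itself.

```python
import numpy as np


def western_electric_violations(window_means, center, sigma):
    """Return indices violating two Western Electric rules.

    rule_1: a single point more than 3 sigma from the center line.
    rule_4: the eighth (or later) consecutive point on one side of the center line.
    """
    x = np.asarray(window_means, dtype=float)
    z = (x - center) / sigma

    rule_1 = np.flatnonzero(np.abs(z) > 3).tolist()

    rule_4 = []
    side = np.sign(z)
    run = 0
    for i in range(len(side)):
        if side[i] != 0 and i > 0 and side[i] == side[i - 1]:
            run += 1
        else:
            # Start a new run; points exactly on the center line reset it.
            run = 1 if side[i] != 0 else 0
        if run >= 8:
            rule_4.append(i)

    return {"rule_1": rule_1, "rule_4": rule_4}


# Hypothetical daily means of a temperature feature; training center = 75, sigma = 1.
daily_means = [75.2, 74.8, 75.1, 79.0, 75.5, 75.4, 75.3, 75.6, 75.2, 75.1, 75.7, 75.3]
violations = western_electric_violations(daily_means, center=75.0, sigma=1.0)
print(violations)
```

The run rule is the useful one for slow drift: no single day in the tail of this series looks alarming, but a long streak on one side of the training mean is a hint that the center has moved.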

Reality Drift

Reality drift is a special case of concept drift: while it is an outside (unmeasured and unforeseen) influence, these foundational shifts can have a much more profound and large-scale impact on the effectiveness of a model than general concept drift. Not only pandemics cause reality drift, though. Horseshoe manufacturers, after all, would have had similar issues with predicting demand accurately during the first few decades of the 20th century.

Events such as these are foundationally transformative and disruptive, particularly when they are black swan events. In the most severe cases, they can be so detrimental to businesses that a malfunctioning model is the worst of their worries; the continued existence of the company is far more pressing of a problem.

For more moderate disruptive reality drifts, the ML solutions that are running in production are generally hit pretty heavily. With no ability to recognize which new features can explain the underlying tectonic shifts in the business, adapting solutions to handle large and immediate changes becomes a temporal problem. There simply isn’t enough time or resources to repair the models (and sometimes, not even the ability to collect the data needed).

When these sorts of foundational paradigm-shifting events happen, models affected by the change in the state of the world should face one of two fates:

Abandonment due to poor performance and/or cost-savings initiatives

Model rebuilding after extensive feature generating and engineering

What you absolutely should never do is quietly ignore the problem. The predictions are likely to be irrelevant, retraining on original features blindly is not likely to solve the problem, and leaving poor-performing models running is costly. At the bare minimum, a comprehensive assessment of the nature and state of the features going into models needs to be undertaken to ensure that the validity is still sound. Without approaching these events in this thorough manner of validation and verification, the chances that the model (and other models) is allowed to continue to produce unvetted results for very long are slim.

Feedback Drift and the Law of Diminishing Returns

A form of drift less spoken of is feedback drift. Imagine that we’re working on a modeling solution for estimating a defect density on a part manufactured in a factory. Our model is a causal model, with our production recipes being built in such a way that it reflects a directed acyclic graph that mirrors our production process. After running through this Bayesian modeling approach to simulate the different effects of changing parameters to the end result (our yield), we find ourselves with a set of seemingly optimal parameters to put into our machines.

At first, the model shows relationships that do not result in optimal outcomes. As we explore the feature space further and retrain our model, the simulations more accurately reflect the expected outcome when we initiate tests. Our yields stabilize to nearly 100% over the first few months of running the model and utilizing the simulation’s outputs.

By controlling for the causal relationships present in the system that we’re modeling, we’ve effectively created a feedback loop in the model. The variances of allowable parameters to adjust shrink, and were we to build a supervised machine learning model for validation purposes on this data, it wouldn’t learn very much. There simply isn’t a signal to learn from anymore (at least not one worth much).

This effect isn’t present in all situations, as causal models are more heavily affected by this than correlation-based traditional ML models are. But in some situations, the results of the predictions of a correlation-based model can contaminate our new features coming in, thereby skewing the effects of those features that were collected with the observed result that actually occurred. Churn models, fraud models, and recommendation engines are all highly susceptible to these effects (we are directly manipulating the behavior of our customers by acting on the predictions to promote positive results and minimize negative results).

This is a risk in many supervised learning problems, and it can be detected by evaluating the prediction quality over time. As each retraining happens, the metrics associated with the model should be recorded (MLflow Tracking is a great tool for this) and measured periodically to see if degradation occurs on the inclusion of new feature data to the model. If the model is simply incapable of returning to acceptable levels of loss metrics based on the validation data being used for recent activity, you may be in the realm of diminishing returns.
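However the metrics are recorded, the check itself can be very simple. The sketch below assumes you already have the validation loss from each retraining in chronological order (pulled from whatever tracking store you use) and flags the case where the last few retrainings all failed to get back under an acceptable level. The function name, the `patience` parameter, and the example numbers are hypothetical.

```python
def diminishing_returns(loss_history, acceptable_loss, patience=3):
    """True when the last `patience` retrainings all stayed above acceptable_loss.

    loss_history: validation loss per retraining, oldest first.
    """
    recent = loss_history[-patience:]
    return len(recent) == patience and all(loss > acceptable_loss for loss in recent)


# Hypothetical loss values recorded after each monthly retraining.
monthly_validation_loss = [0.30, 0.31, 0.42, 0.45, 0.47]
print(diminishing_returns(monthly_validation_loss, acceptable_loss=0.35))
```

A flag like this does not say why retraining stopped helping; it only tells you that more retraining on the same features is unlikely to be the fix.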


Deeper Dive: Consequences of Model Drift

While we’ve explored the different types of model drift, it’s crucial to understand how these shifts can negatively impact a model’s performance. Here’s a closer look at the potential consequences:

Decreased Accuracy and Reliability: Model drift can lead to a decline in the accuracy and reliability of predictions made by the model. As the model encounters data that deviates from its training distribution, its ability to generalize and make accurate predictions on new data suffers. Imagine a spam filter trained on past email patterns. If spammers change their tactics and use new techniques, the filter might start letting new spam through, or misclassifying legitimate emails as spam, because the patterns it learned no longer match what it sees.

Biased and Unfair Outputs: Certain types of drift, like concept drift, can introduce biases into the model’s outputs. If the underlying concepts the model learned from the training data become outdated or irrelevant, its decisions might become biased towards patterns present in the older data. For instance, a loan approval model trained on historical data might unfairly disadvantage new loan applicants due to concept drift in the economic climate.

Degraded Decision-Making: Machine learning models are increasingly used for critical decision-making processes. If a model’s performance degrades due to drift, it can lead to poor decision-making with potentially significant consequences. For example, a stock trading model experiencing drift could make unprofitable investment decisions.

Loss of Trust and Reputation: When models consistently deliver inaccurate or misleading outputs, it can erode user trust and damage the reputation of the system or organization relying on the model.

By understanding these potential consequences, it becomes evident why proactively monitoring and mitigating model drift is crucial for maintaining the effectiveness and reliability of machine learning systems.

In Part II of this series, we will focus on corrective actions for drift and present more examples with Python code and open-source tools.
